
Posted by Richy George on 29 April, 2024

At the end of March 2024, Mike Stonebraker announced in a blog post the release of DBOS Cloud, “a transactional serverless computing platform, made possible by a revolutionary new operating system, DBOS, that implements OS services on top of a distributed database.” That sounds odd, to put it mildly, but it makes more sense when you read the origin story:

“The idea for DBOS (DataBase oriented Operating System) originated 3 years ago with my realization that the state an operating system must maintain (files, processes, threads, messages, etc.) has increased in size by about 6 orders of magnitude since I began using Unix on a PDP-11/40 in 1973. As such, storing OS state is a database problem. Also, Linux is legacy code at the present time and is having difficulty making forward progress. For example there is no multi-node version of Linux, requiring people to run an orchestrator such as Kubernetes. When I heard a talk by Matei Zaharia in which he said Databricks could not use traditional OS scheduling technology at the scale they were running and had turned to a DBMS solution instead, it was clear that it was time to move the DBMS into the kernel and build a new operating system.”

If you don’t know Stonebraker, he’s been a database-focused computer scientist (and professor) since the early 1970s, when he and his UC Berkeley colleagues Eugene Wong and Larry Rowe founded Ingres. Ingres later inspired Sybase, which was eventually the basis for Microsoft SQL Server. After selling Ingres to Computer Associates, Stonebraker and Rowe started researching Postgres, which later became PostgreSQL and also evolved into Illustra, which was purchased by Informix.

I heard Stonebraker talk about Postgres at a DBMS conference in 1980. What I got out of that talk, aside from an image of “jungle drums” calling for SQL, was the idea that you could add support for complex data types to the database by implementing new index types, extending the query language, and adding support for that to the query parser and optimizer. The example he used was geospatial information, and he explained one kind of index structure that would make 2D geometric database queries go very fast. (This facility eventually became PostGIS. The R-tree currently used by default in PostGIS GiST indexes wasn’t invented until 1984, so Mike was probably talking about the older quadtree index.)

Skipping ahead 44 years, it should surprise precisely nobody in the database field that DBOS uses a distributed version of PostgreSQL as its kernel database layer.

The DBOS system diagram makes it clear that a database is part of the OS kernel. The distributed database relies on a minimal kernel, but sits under the OS services instead of running in the application layer as a normal database would.

DBOS Transact, an open-source TypeScript framework, supports Postgres-compatible transactions, reliable workflow orchestration, HTTP serving using GET and POST, communication with external services and third-party APIs, idempotent requests using UUID keys, authentication and authorization, Kafka integration with exactly-once semantics, unit testing, and self-hosting. DBOS Cloud, a transactional serverless platform for deploying DBOS Transact applications, supports serverless app deployment, time-travel debugging, cloud database management, and observability.

Let’s highlight some major areas of interest.

The code shown in the screenshot below demonstrates transactions, as well as HTTP serving using GET. It’s worthwhile to read the code closely. It’s only 18 lines, not counting blank lines.

The first import (line 1) brings in the DBOS SDK classes that we’ll need. The second import (line 2) brings in the Knex.js SQL query builder, which handles sending the parameterized query to the Postgres database and returning the resulting rows. The database table schema is defined in lines 4 through 8; the only columns are a name string and a greet_count integer.

There is only one method in the Hello class, helloTransaction. It is wrapped in @GetApi and @Transaction decorators. The first causes the method to be served in response to an HTTP GET request on the path /greeting/ followed by the username parameter you want to pass in. The second wraps the database call in a transaction, so that two instances can’t update the database simultaneously.

The database query string (line 16) uses PostgreSQL syntax to try to insert a row into the database for the supplied name and an initial count of 1. If the row already exists, then the ON CONFLICT clause runs an update operation that increments the count in the database.

Line 17 uses Knex.js to send the SQL query to the DBOS system database and retrieves the result. Line 18 pulls the count out of the first row of results and returns the greeting string to the calling program.
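To make the insert-or-increment behavior concrete, here is a minimal sketch that models it in memory. It is not the generated DBOS code and it does not call Knex.js or Postgres: a Map stands in for the table, the function names are hypothetical, and the table name dbos_hello and the greeting text in the comments and strings are assumptions made for illustration.

```typescript
// Conceptual stand-in for the upsert described above; not DBOS or Knex.js code.
// A query of the shape the article describes might look like this
// (the table name dbos_hello is an assumption; the columns come from the article):
//   INSERT INTO dbos_hello (name, greet_count) VALUES (?, 1)
//   ON CONFLICT (name) DO UPDATE SET greet_count = dbos_hello.greet_count + 1
//   RETURNING greet_count;

// In-memory model of the table: name -> greet_count.
const greetCounts = new Map<string, number>();

// Mirrors the query's semantics: insert with a count of 1, or increment on conflict.
function upsertGreeting(name: string): number {
  const next = (greetCounts.get(name) ?? 0) + 1;
  greetCounts.set(name, next);
  return next; // plays the role of RETURNING greet_count
}

// Mirrors the shape of the handler: run the upsert, then build the greeting.
function hello(user: string): string {
  const greetCount = upsertGreeting(user);
  return `Hello, ${user}! You have been greeted ${greetCount} times.`;
}

console.log(hello("Mike"));
console.log(hello("Mike"));
```

In the real application the database performs the insert-or-increment as a single statement inside a transaction, which is what keeps concurrent requests from losing updates. The single-threaded Map here only shows the logic.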

The use of SQL and a database for what feels like it should be a core in-memory system API, such as a Linux atomic counter or a Windows interlocked variable, seems deeply weird. Nevertheless, it works.

This TypeScript code for a Hello class is generated when you perform a DBOS create operation. As you can see, it relies on the @GetApi and @Transaction decorators to serve the function from HTTP GET requests and run the function as a database transaction.

When you run an application in DBOS Cloud it records every step and change it makes (the workflow) in the database. You can debug that using Visual Studio Code and the DBOS Time Travel Debugger extension. The time-travel debugger allows you to debug your DBOS application against the database as it existed at the time the selected workflow originally executed.
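One way to picture "the database as it existed at the time" is a table that keeps every write along with a version number, so a reader can ask for the state as of an earlier version. The sketch below is purely conceptual: the article does not say how DBOS Cloud implements this, and VersionedTable, write, and readAsOf are hypothetical names.

```typescript
// Conceptual sketch only; not a description of how DBOS Cloud stores history.
interface VersionedWrite {
  version: number;
  key: string;
  value: number;
}

class VersionedTable {
  private log: VersionedWrite[] = [];
  private version = 0;

  // Append a write and return the version at which it was committed.
  write(key: string, value: number): number {
    this.version += 1;
    this.log.push({ version: this.version, key, value });
    return this.version;
  }

  // Return the value a key had as of the given version, ignoring later writes.
  readAsOf(key: string, asOfVersion: number): number | undefined {
    let value: number | undefined;
    for (const w of this.log) {
      if (w.version > asOfVersion) break; // the log is ordered by version
      if (w.key === key) value = w.value;
    }
    return value;
  }
}

const table = new VersionedTable();
const v1 = table.write("Mike", 1);
const v2 = table.write("Mike", 2);
console.log(table.readAsOf("Mike", v1), table.readAsOf("Mike", v2));
```

Replaying a saved workflow against readAsOf(..., v1) rather than the latest state is the idea behind debugging a past execution with the data it originally saw.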

To perform time-travel debugging, you first start with a CodeLens to list saved trace workflows. Once you choose the one you want, you can debug it using Visual Studio Code with a plugin, or from the command line.

Time-travel debugging with a saved workflow looks very much like ordinary debugging in Visual Studio Code. The code being debugged is the same Hello class you saw earlier.

The DBOS Quickstart tutorial requires Node.js 20 or later and a PostgreSQL database you can connect to, either locally, in a Docker container, or remotely. I already had Node.js v20.9.0 installed on my M1 MacBook, but I upgraded it to v20.12.1 from the Node.js website.

I didn’t have PostgreSQL installed, so I downloaded and ran the interactive installer for v16.2 from EnterpriseDB. This installer creates a full-blown macOS server and applications. If I had used Homebrew instead, it would have created command-line applications, and if I had used Postgres.app, I would have gotten a menu-bar app.

The Quickstart proper starts by creating a DBOS app directory using Node.js.

martinheller@Martins-M1-MBP ~ % npx -y @dbos-inc/create@latest -n myapp
Merged .gitignore files saved to myapp/.gitignore
added 590 packages, and audited 591 packages in 25s
found 0 vulnerabilities
added 1 package, and audited 592 packages in 1s
found 0 vulnerabilities
added 129 packages, and audited 721 packages in 5s
found 0 vulnerabilities
Application initialized successfully!

Then you configure the app to use your Postgres server and export your Postgres password into an environment variable.

martinheller@Martins-M1-MBP ~ % cd myapp
martinheller@Martins-M1-MBP myapp % npx dbos configure
? What is the hostname of your Postgres server? localhost
? What is the port of your Postgres server? 5432
? What is your Postgres username? postgres
martinheller@Martins-M1-MBP myapp % export PGPASSWORD=*********

After that, you create a “Hello” database using Node.js and Knex.js.

martinheller@Martins-M1-MBP myapp % npx dbos migrate
2024-04-09 15:01:42 [info]: Starting migration: creating database hello if it does not exist
2024-04-09 15:01:42 [info]: Database hello does not exist, creating...
2024-04-09 15:01:42 [info]: Executing migration command: npx knex migrate:latest
2024-04-09 15:01:43 [info]: Batch 1 run: 1 migrations
2024-04-09 15:01:43 [info]: Creating DBOS tables and system database.
2024-04-09 15:01:43 [info]: Migration successful!

With that complete, you build and run the DBOS app locally.

martinheller@Martins-M1-MBP myapp % npm run build
npx dbos start
> myapp@0.0.1 build
> tsc
2024-04-09 15:02:30 [info]: Workflow executor initialized
2024-04-09 15:02:30 [info]: HTTP endpoints supported:
2024-04-09 15:02:30 [info]: GET : /greeting/:user
2024-04-09 15:02:30 [info]: DBOS Server is running at http://localhost:3000
2024-04-09 15:02:30 [info]: DBOS Admin Server is running at http://localhost:3001
^C

At this point, you can browse to http://localhost:3000 to test the application. That done, you register for the DBOS Cloud and provision your own database there.

martinheller@Martins-M1-MBP myapp % npx dbos-cloud register -u meheller
2024-04-09 15:11:35 [info]: Welcome to DBOS Cloud!
2024-04-09 15:11:35 [info]: Before creating an account, please tell us a bit about yourself!
Enter First/Given Name: Martin
Enter Last/Family Name: Heller
Enter Company: self
2024-04-09 15:12:06 [info]: Please authenticate with DBOS Cloud!
Login URL: https://login.dbos.dev/activate?user_code=QWKW-TXTB
2024-04-09 15:12:12 [info]: Waiting for login...
2024-04-09 15:12:17 [info]: Waiting for login...
2024-04-09 15:12:22 [info]: Waiting for login...
2024-04-09 15:12:27 [info]: Waiting for login...
2024-04-09 15:12:32 [info]: Waiting for login...
2024-04-09 15:12:38 [info]: Waiting for login...
2024-04-09 15:12:44 [info]: meheller successfully registered!

martinheller@Martins-M1-MBP myapp % npx dbos-cloud db provision iw_db -U meheller
Database Password: ********
2024-04-09 15:19:22 [info]: Successfully started provisioning database: iw_db
2024-04-09 15:19:28 [info]: {"PostgresInstanceName":"iw_db","HostName":"userdb-51fcc211-6ed3-4450-a90e-0f864fc1066c.cvc4gmaa6qm9.us-east-1.rds.amazonaws.com","Status":"available","Port":5432,"DatabaseUsername":"meheller","AdminUsername":"meheller"}
2024-04-09 15:19:28 [info]: Database successfully provisioned!

Finally, you can register and deploy your app in the DBOS Cloud.

martinheller@Martins-M1-MBP myapp % npx dbos-cloud app register -d iw_db
2024-04-09 15:20:09 [info]: Loaded application name from package.json: myapp
2024-04-09 15:20:09 [info]: Registering application: myapp
2024-04-09 15:20:11 [info]: myapp ID: d8806829-c5b8-4df0-8b5a-2d1bf87c3322
2024-04-09 15:20:11 [info]: Successfully registered myapp!
martinheller@Martins-M1-MBP myapp % npx dbos-cloud app deploy
2024-04-09 15:20:35 [info]: Loaded application name from package.json: myapp
2024-04-09 15:20:35 [info]: Submitting deploy request for myapp
2024-04-09 15:21:09 [info]: Submitted deploy request for myapp. Assigned version: 1712676035
2024-04-09 15:21:13 [info]: Waiting for myapp with version 1712676035 to be available
2024-04-09 15:21:21 [info]: Successfully deployed myapp!
2024-04-09 15:21:21 [info]: Access your application at https://meheller-myapp.cloud.dbos.dev/

The “Hello” application running in the DBOS Cloud counts every greeting. It uses the code you saw earlier.

The “Hello” application does illustrate some of the core features of DBOS Transact and the DBOS Cloud, but it’s so basic that it’s barely a toy. The Programming Quickstart adds a few more details, and it’s worth your time to go through it. You’ll learn how to use communicator functions to access third-party services (email, in this example) as well as how to compose reliable workflows. You’ll literally interrupt the workflow and restart it without re-sending the email: DBOS workflows always run to completion and each of their operations executes once and only once. That’s possible because DBOS persists the output of each step in your database.
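The idea of persisting each step's output can be sketched in a few lines. This is a conceptual model, not the DBOS Transact API: WorkflowRun, step, and greetingWorkflow are hypothetical names, and a Map stands in for the database table that would hold the recorded outputs.

```typescript
// Conceptual sketch of "persist the output of each step"; not the DBOS Transact API.
// A Map stands in for the database table that would hold step outputs.
type StepStore = Map<string, string>;

class WorkflowRun {
  private stepIndex = 0;
  private workflowId: string;
  private store: StepStore;

  constructor(workflowId: string, store: StepStore) {
    this.workflowId = workflowId;
    this.store = store;
  }

  // Run a step once; on re-execution, return the recorded output instead.
  step(fn: () => string): string {
    const key = `${this.workflowId}:${this.stepIndex++}`;
    const recorded = this.store.get(key);
    if (recorded !== undefined) return recorded;
    const output = fn();
    this.store.set(key, output);
    return output;
  }
}

// Stand-in for an external email service; each entry is one email sent.
const sentEmails: string[] = [];

function greetingWorkflow(run: WorkflowRun, crashAfterEmail: boolean): string {
  const receipt = run.step(() => {
    sentEmails.push("welcome");
    return "email-sent";
  });
  if (crashAfterEmail) throw new Error("simulated interruption");
  const note = run.step(() => "greeting-recorded");
  return `${receipt}, ${note}`;
}

const store: StepStore = new Map();
try {
  greetingWorkflow(new WorkflowRun("wf-1", store), true);
} catch {
  // The run was interrupted after the email step; its output is already stored.
}
const result = greetingWorkflow(new WorkflowRun("wf-1", store), false);
console.log(result, sentEmails.length);
```

On the second run the email step finds its recorded output and is skipped, so the workflow resumes from the first step that has no stored result. The sketch ignores the window between performing a step and recording it, which a real system has to handle.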

Once you’ve understood the Programming Quickstart, you’ll be ready to try out the two DBOS demo applications, which do rise to the level of being toys. Both demos use Next.js for their front ends, and both use DBOS workflows, transactions, and communicators.

The first demo, E-Commerce, is a web shopping and payment processing system. It’s worthwhile reading the Under the Covers section of the README in the demo’s repository to understand how it works and how you might want to upgrade it to, for example, use a real-world payment provider.

The second demo, YKY Social, simulates a simple social network, and uses TypeORM rather than Knex.js for its database code. It also uses Amazon S3 for profile photos. If you’re serious about using DBOS yourself, you should work through both demo applications.

I have to say that DBOS and DBOS Cloud look very interesting. Reliable execution and time-travel debugging, for example, are quite desirable. On the other hand, I wouldn’t want to build a real application on DBOS or DBOS Cloud at this point. I have lots of questions, starting with “How does it scale in practice?” and probably ending with “How much will it cost at X scale?”

I mentioned earlier that DBOS code looks weird but works. I would imagine that any programming shop considering writing an application on it would be discouraged or even repelled by the “it looks weird” part, as developers tend to be set in their ways until what they are doing no longer works.

I also have to point out that the current implementation of DBOS is very far from the system diagram you saw near the beginning of this review. Where’s the minimal kernel? DBOS currently runs on macOS, Linux, and Windows. None of those are minimal kernels. DBOS Cloud currently runs on AWS. Again, not a minimal kernel.

So, overall, DBOS is a tantalizing glimpse of something that may eventually turn out to be cool. It’s new and shiny, and it comes from smart people, but it will be a while before it could possibly become a mainstream system.

—

Cost: Free with usage limits; paid plans require you to contact sales.

Platform: macOS, Linux, Windows, AWS.


Posted by Richy George on 16 April, 2024

Open-source database provider Qdrant has made available Qdrant Hybrid Cloud, a dedicated vector database to be offered in a managed hybrid cloud model.

Qdrant, the open-source foundation of both Qdrant Cloud and Qdrant Hybrid Cloud, is a vector similarity search engine and vector database written in Rust. Qdrant offers a set of features for performance optimization and can handle billions of vectors with scale and memory safety, the company said.

Qdrant said it lets businesses deploy vector databases across any cloud provider, on-prem data center, or edge location, thus ensuring performance, security, and cost efficiency for AI-driven applications. Vector databases have emerged as a critical component for building generative AI applications.

Qdrant Hybrid Cloud lets customers deploy a vector database in their chosen environment without sacrificing the benefits of a managed cloud service. In addition to running on Google Cloud Platform and Microsoft Azure, Qdrant Hybrid Cloud can run in Oracle Cloud Infrastructure, Red Hat OpenShift, Vultr, DigitalOcean, OVHcloud, Scaleway, Stackit, Civo, or any other private or public infrastructure with Kubernetes support.


Posted by Richy George on 9 April, 2024

Google Cloud is adding capabilities driven by its proprietary large language model, Gemini, to its database offerings, which include Bigtable, Spanner, Memorystore for Redis, Firestore, Cloud SQL for MySQL, and AlloyDB for PostgreSQL, the company announced at its annual Next conference.

The Gemini-driven capabilities, which are currently in public preview, include SQL generation and AI assistance in managing and migrating databases.

Last year, the company added Duet AI, now rebranded as Gemini, to Spanner and its Database Migration Service.

The SQL generation capability can be accessed via the company’s SQL editor, Database Studio, which is found inside Google’s Cloud Console.

As the name suggests, this capability allows developers to easily generate, summarize, and fix SQL code with intelligent code assistance, code completion, and guidance directly inside Database Studio, which in turn improves productivity, the company said, adding that Database Studio supports both MySQL and PostgreSQL dialects.

In addition, Database Studio comes with a context-aware chat interface that can take input in natural language to help build database applications faster, according to the company.

Google is not the only database provider that has added SQL code generation to its list of capabilities, analysts said.

“SQL code generation with assistance from generative AI has become one of the low-hanging fruits for generative AI over the past year,” said Tony Baer, principal analyst at dbInsight.

“The new breed of generative AI database code assistants should eventually have a key advantage over those assistants that cater to general-purpose languages, which is that they are database-specific and can therefore read the metadata of databases to not just form, but also optimize SQL code,” Baer explained.

In order to help manage databases better, the cloud service provider is adding a new feature called the Database Center, which will allow operators to manage an entire fleet of databases from a single pane.

Database Center also provides intelligent dashboards to proactively assess availability, data protection, security, and compliance posture, the company said.

Further, the company is infusing Gemini into the Database Center via a natural language-based chat window that will allow enterprise teams to interact with the databases and find more insights about them.

The chat window also can be used to generate troubleshooting tips for database-related issues, the company said.

Google’s idea to have a single pane to manage multiple databases takes inspiration from Oracle, according to Baer.

While Oracle provides the capability for multiple instances of the same database, which is multimodal, Google extends the capability to a heterogeneous collection of databases, Baer said.

“Having central control means that enterprises can be consistent with their policies for security, data access, and service level agreements (SLAs). That’s a major step toward the simplification that we expect from the cloud,” the principal analyst explained.

Google has also extended Gemini to its Database Migration Service, which earlier had support for Duet AI.

Gemini’s improved features will make the service better, the company said, adding that Gemini can help convert database-resident code, such as stored procedures and functions, to the PostgreSQL dialect.

Additionally, Gemini-powered database migration also focuses on explaining the translation of the code with a side-by-side comparison of dialects, along with detailed explanations of the code and recommendations.

The focus on explaining the code has been planned to help upskill and retrain SQL developers, the company said.

In addition to powering databases with Gemini, Google has added new features to AlloyDB AI.

AlloyDB AI, which was introduced last year as part of its AlloyDB for PostgreSQL database service, is a suite of integrated capabilities targeted at helping developers build generative AI-based applications using real-time data.

The new features include allowing generative AI-based applications to query data with natural language and a new type of database view.

The enablement of querying data with natural language will allow AI-based applications to respond to more sets of questions from enterprise teams, the company said.

On the other hand, the new type of database view — parameterized secure view — allows enterprise teams to secure data based on the end-users’ context.

AlloyDB AI can be downloaded using AlloyDB Omni, which has been made generally available. AlloyDB Omni is a downloadable version of Google Cloud’s PostgreSQL-compatible database service.

Other updates include the addition of Bigtable Data Boost, similar to Spanner Data Boost released last year, and performance enhancements to Memorystore for Redis.


Posted by Richy George on 4 April, 2024

Once you get past the chatbot hype, it’s clear that generative AI is a useful tool, providing a way of navigating applications and services using natural language. By tying our large language models (LLMs) to specific data sources, we can avoid the risks that come with using nothing but training data.

While it is possible to fine-tune an LLM on specific data, that can be expensive and time-consuming, and it can also lock you into a specific time frame. If you want accurate, timely responses, you need to use retrieval-augmented generation (RAG) to work with your data.

The neural networks that power LLMs are, at heart, sophisticated vector search engines that extrapolate the paths of semantic vectors in an n-dimensional space, where the higher the dimensionality, the more complex the model. So, if you’re going to use RAG, you need to have a vector representation of your data that can both build prompts and seed the vectors used to generate output from an LLM. That’s why it’s one of the techniques that powers Microsoft’s various Copilots.

I’ve talked about these approaches before, looking at Azure AI Studio’s Prompt Flow, Microsoft’s intelligent agent framework Semantic Kernel, the Power Platform’s OpenAI-powered boost in its re-engineered Q and A Maker Copilot Studio, and more. In all those approaches, there’s one key tool you need to bring to your applications: a vector database. This allows you to use the embedding tools used by an LLM to generate text vectors for your content, speeding up search and providing the necessary seeds to drive a RAG workflow. At the same time, RAG and similar approaches ensure that your enterprise data stays in your servers and isn’t exposed to the wider world beyond queries that are protected using role-based access controls.

While Microsoft has been adding vector search and vector index capabilities to its own databases, as well as supporting third-party vector stores in Azure, one key database technology has been missing from the RAG story: graph databases. This NoSQL approach provides an easy route to a vector representation of your data, with the added bonus of encoding relationships in the edges that link the graph nodes that store your data.

Graph databases like this shouldn’t be confused with the Microsoft Graph. It uses a node model for queries, but it doesn’t use it to infer relationships between nodes. Graph databases are a more complex tool, and although they can be queried using GraphQL, they have a much more complex query process, using tools such as the Gremlin query engine.

One of the best-known graph databases is Neo4j, which recently announced support for the enterprise version of its cloud-hosted service, Aura, on Azure. Available in the Azure Marketplace, it’s a SaaS version of the familiar on-premises tool, allowing you to get started with data without having to spend time configuring your install. Two versions are available, with different memory options built on reserved capacity so you don’t need to worry about instances not being available when you need them. It’s not cheap, but it does simplify working with large amounts of data, saving a lot of time when working with large-scale data lakes in Fabric.

One key feature of Neo4j is the concept of the knowledge graph, linking unstructured information in nodes into a structured graph. This way you can quickly see relationships between, say, a product manual and the whole bill of materials that goes into the product. Instead of pointing out a single part that needs to be replaced for a fix, you have a complete dependency graph that shows what it affects and what’s necessary to make the fix.

A tool like Neo4j that can sit on top of a large-scale data lake like Microsoft’s Fabric gives you another useful way to build out the information sources for a RAG application. Here, you can use the graph visualization tool that comes as part of Neo4j to explore the complexities of your lakehouses, generating the underlying links between your data and giving you a more flexible and understandable view of your data.

One important aspect of a knowledge graph is that you don’t need to use it all. You can use the graph relationships to quickly filter out information you don’t need for your application. This reduces complexity and speeds up searches. By ensuring that the resulting vectors and prompts are confined to a strict set of relationships, it reduces the risks of erroneous outputs from your LLM.
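The filtering idea can be sketched in a few lines of Python. This is a minimal illustration rather than Neo4j's API: the edge list, the relationship names, and the `related_nodes` helper are all hypothetical, and a real deployment would express the same restriction in the graph database's own query language.

```python
from collections import deque

# Hypothetical toy knowledge graph: each edge is (source, relationship, target).
EDGES = [
    ("pump_manual", "DESCRIBES", "pump"),
    ("pump", "HAS_PART", "impeller"),
    ("pump", "HAS_PART", "seal"),
    ("seal", "SUPPLIED_BY", "acme_corp"),
    ("pump", "SOLD_IN", "emea_region"),
]


def related_nodes(edges, start, allowed_relationships):
    """Breadth-first walk from `start`, following only allowed relationship types."""
    adjacency = {}
    for source, relationship, target in edges:
        if relationship in allowed_relationships:
            adjacency.setdefault(source, []).append(target)

    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


if __name__ == "__main__":
    # Only documentation and parts relationships matter for a repair assistant,
    # so supplier and sales-region nodes never reach the prompt.
    print(sorted(related_nodes(EDGES, "pump_manual", {"DESCRIBES", "HAS_PART"})))
```

Restricting the walk to two relationship types keeps supplier and sales data out of the result set, which is the kind of narrowing the paragraph above describes.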

There’s even the prospect of using LLMs to help generate those knowledge graphs. The summarization tools identify specific entities within the graph database and then provide the links needed to define relationships. This approach lets you quickly extend existing data models into graphs, making them more useful as part of an AI-powered application. At the same time, you can use the Azure OpenAI APIs to add a set of embeddings to your data in order to use vector search to explore your data as part of an agent-style workflow using LangChain or Semantic Kernel.

The real benefit of using a graph database with a large language model comes with a variation on the familiar RAG approach, GraphRAG. Developed by Microsoft Research, GraphRAG uses knowledge graphs to improve grounding in private data, going beyond the capabilities of a standard RAG approach to use the knowledge graph to link related pieces of information and generate complex answers.

One point to understand when working with large amounts of private data using an LLM is the size of the context window. In practice, it’s too computationally expensive to use the number of tokens needed to deliver a lot of data as part of a prompt. You need a RAG approach to get around this limitation, and GraphRAG goes further, letting you deliver a lot more context around your query.
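To make the context-window constraint concrete, here is a rough sketch of how a retrieval step might pack only the most relevant passages into a fixed token budget. Everything in it is an assumption for illustration: the `pack_context` helper is hypothetical, the relevance scores are made up, and whitespace word counts stand in for a real tokenizer.

```python
def pack_context(chunks, max_tokens, count_tokens=lambda text: len(text.split())):
    """Greedily keep the highest-scoring chunks that fit within max_tokens.

    `chunks` is a list of (score, text) pairs. The default token counter is a
    crude whitespace split, standing in for a model-specific tokenizer.
    """
    selected = []
    used = 0
    for _score, text in sorted(chunks, key=lambda pair: pair[0], reverse=True):
        cost = count_tokens(text)
        if used + cost <= max_tokens:
            selected.append(text)
            used += cost
    return selected, used


if __name__ == "__main__":
    candidates = [
        (0.9, "seal replacement requires draining the pump"),
        (0.4, "the pump was first sold in 2019 across three regions"),
        (0.7, "use gasket kit B for the seal"),
    ]
    passages, tokens_used = pack_context(candidates, max_tokens=14)
    print(passages, tokens_used)
```

A greedy pass like this is only one possible policy; the point is that something has to choose what goes into the prompt, because the whole data set will not fit.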

The original GraphRAG research uses a database of news stories, which a traditional RAG approach fails to parse effectively. However, with a knowledge graph, entities and relationships are relatively simple to extract from the sources, allowing the application to select and summarize news stories that contain the search terms, by providing the LLM with much more context. This is because the graph database structure naturally clusters similar semantic entities, while providing deeper context in the relationships encoded in the edges between those nodes.

Instead of searching for like terms, much like a traditional search engine, GraphRAG allows you to extract information from the entire dataset you’re using, whether transcripts of support calls or all the documents associated with a specific project.

Although the initial research uses automation to build and cluster the knowledge graph, there is also an opportunity to use Neo4j to work with massive data lakes in Microsoft Fabric. Visualizing that data lets data scientists and business analysts create their own clusters, which can help produce GraphRAG applications that are driven by what matters to your business as much as by the underlying patterns in the data.

Having a graph database like Neo4j in the Azure Marketplace gives you a tool that helps you understand and visualize the relationships in your data in a way that supports both humans and machines. Integrating it with Fabric should help build large-scale, context-aware, LLM-powered applications, letting you get grounded results from your data in a way that standard RAG approaches can miss. It’ll be interesting to see if Microsoft starts implementing GraphRAG in its own Prompt Flow LLM tool.


Posted by Richy George on 27 March, 2024

Microsoft has announced a private preview of Copilot in Azure SQL Database, an AI assistant that improves productivity in the Azure portal by offering natural language to SQL conversion, along with self-help for database administration.

Microsoft announced the preview on March 21. To sign up for the preview, users can request access.

Copilot in Azure SQL Database enables the Azure portal query editor to translate natural language queries to SQL, making database interactions more intuitive, Microsoft said. Plus, Azure Copilot integration adds Azure SQL Database skills into Microsoft Copilot for Azure, providing self-guided assistance that enables users to manage databases and solve issues independently.

Copilot in Azure SQL Database integrates data and formulates responses using public documentation, database schema, dynamic management views, catalog views, and Azure supportability diagnostics, Microsoft said.

Within the Azure portal query editor, Copilot for Azure SQL Database uses table and view names, column names, primary key, and foreign key metadata to generate T-SQL code. Users then can review and execute the code suggestion. The Copilot also can answer questions with prompts like “Which real estate agent has listed more than two properties for sale?” or “Show me a pivot summary table that displays the number of properties sold in each year from 2020 to 2023,” Microsoft said.
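The general pattern of feeding schema metadata to a model can be sketched as follows. This is not Microsoft's implementation: it swaps in SQLite (from Python's standard library) for Azure SQL Database so it can run anywhere, and the table names, the `describe_schema` and `build_prompt` helpers, and the prompt wording are all hypothetical.

```python
import sqlite3


def make_demo_db():
    """Create a small in-memory database loosely modeled on a real estate schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE agents (agent_id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE listings (
            listing_id INTEGER PRIMARY KEY,
            agent_id INTEGER REFERENCES agents(agent_id),
            status TEXT
        );
    """)
    return conn


def describe_schema(conn):
    """Summarize table, column, primary key, and foreign key metadata as text."""
    lines = []
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    for table in tables:
        columns = conn.execute(f"PRAGMA table_info({table})").fetchall()
        col_text = ", ".join(
            f"{name} {col_type}{' PRIMARY KEY' if pk else ''}"
            for _cid, name, col_type, _notnull, _default, pk in columns)
        lines.append(f"TABLE {table} ({col_text})")
        # Each foreign_key_list row is (id, seq, parent_table, from_col, to_col, ...).
        for fk in conn.execute(f"PRAGMA foreign_key_list({table})"):
            lines.append(f"  FOREIGN KEY {table}.{fk[3]} -> {fk[2]}.{fk[4]}")
    return "\n".join(lines)


def build_prompt(conn, question):
    """Combine the schema summary with the user's natural language question."""
    return (f"Schema:\n{describe_schema(conn)}\n\n"
            f"Write one SQL query that answers: {question}")


if __name__ == "__main__":
    print(build_prompt(make_demo_db(),
                       "Which real estate agent has listed more than two properties for sale?"))
```

The resulting text would be sent to a model, and, as in the workflow described above, the generated SQL should still be reviewed before it is executed.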


Posted by Richy George on 26 March, 2024

Vertex AI Studio is an online environment for building AI apps, featuring Gemini, Google’s own multimodal generative AI model that can work with text, code, audio, images, and video. In addition to Gemini, Vertex AI provides access to more than 40 proprietary models and more than 60 open source models in its Model Garden, for example the proprietary PaLM 2, Imagen, and Codey models from Google Research, open source models like Llama 2 from Meta, and Claude 2 and Claude 3 from Anthropic. Vertex AI also offers pre-trained APIs for speech, natural language, translation, and vision.

Vertex AI supports prompt engineering, hyper-parameter tuning, retrieval-augmented generation (RAG), and model tuning. You can tune foundation models with your own data, using tuning options such as adapter tuning and reinforcement learning from human feedback (RLHF), or perform style and subject tuning for image generation.

Vertex AI Extensions connect models to real-world data and real-time actions. Vertex AI allows you to work with models both in the Google Cloud console and via APIs in Python, Node.js, Java, and Go.

Competitive products include Amazon Bedrock, Azure AI Studio, LangChain/LangSmith, LlamaIndex, Poe, and the ChatGPT GPT Builder. The technical levels, scope, and programming language support of these products vary.

Vertex AI Studio is a Google Cloud console tool for building and testing generative AI models. It allows you to design and test prompts and customize foundation models to meet your application’s needs.

Foundation models are another term for the generative AI models found in Vertex AI. Calling them foundation models emphasizes the fact that they can be customized with your data for the specialized purposes of your application. They can generate text, chat, image, code, video, multimodal data, and embeddings.

Embeddings are vector representations of other data, for example text. Search engines often use vector embeddings, a cosine metric, and a nearest-neighbor algorithm to find text that is relevant (similar) to a query string.
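As a minimal sketch of that idea, the following Python ranks a handful of stored vectors by cosine similarity to a query vector. The three-dimensional vectors and document names are invented for illustration; real embedding models return hundreds or thousands of dimensions, and production systems typically use approximate nearest-neighbor indexes rather than this brute-force scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest_neighbors(query, corpus, k=2):
    """Brute-force k-NN: rank every stored vector by similarity to the query."""
    ranked = sorted(corpus.items(),
                    key=lambda item: cosine_similarity(query, item[1]),
                    reverse=True)
    return [doc_id for doc_id, _vector in ranked[:k]]


# Hypothetical, hand-made "embeddings" for three documents.
CORPUS = {
    "doc_cats": [0.9, 0.1, 0.0],
    "doc_dogs": [0.8, 0.3, 0.1],
    "doc_tax": [0.0, 0.2, 0.9],
}

if __name__ == "__main__":
    print(nearest_neighbors([1.0, 0.0, 0.0], CORPUS, k=2))
```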

The proprietary Google generative AI models available in Vertex AI include:

Vertex AI Studio allows you to test models using prompt samples. The prompt galleries are organized by the type of model (multimodal, text, vision, or speech) and the task being demonstrated, for example “summarize key insights from a financial report table” (text) or “read the text from this handwritten note image” (multimodal).

Vertex AI also helps you to design and save your own prompts. The types of prompt are broken down by purpose, for example text generation versus code generation and single-shot versus chat. Iterating on your prompts is a surprisingly powerful way of customizing a model to produce the output you want, as we’ll discuss below.

When prompt engineering isn’t enough to coax a model into producing the desired output, and you have a training data set in a suitable format, you can take the next step and tune a foundation model in one of several ways: supervised tuning, RLHF tuning, or distillation. Again, we’ll discuss this in more detail later on in this review.

The Vertex AI Studio speech tool can convert speech to text and text to speech. For text to speech you can choose your preferred voice and control its speed. For speech to text, Vertex AI Studio uses the Chirp model, but has length and file format limits. You can circumvent those by using the Cloud Speech-to-Text Console instead.

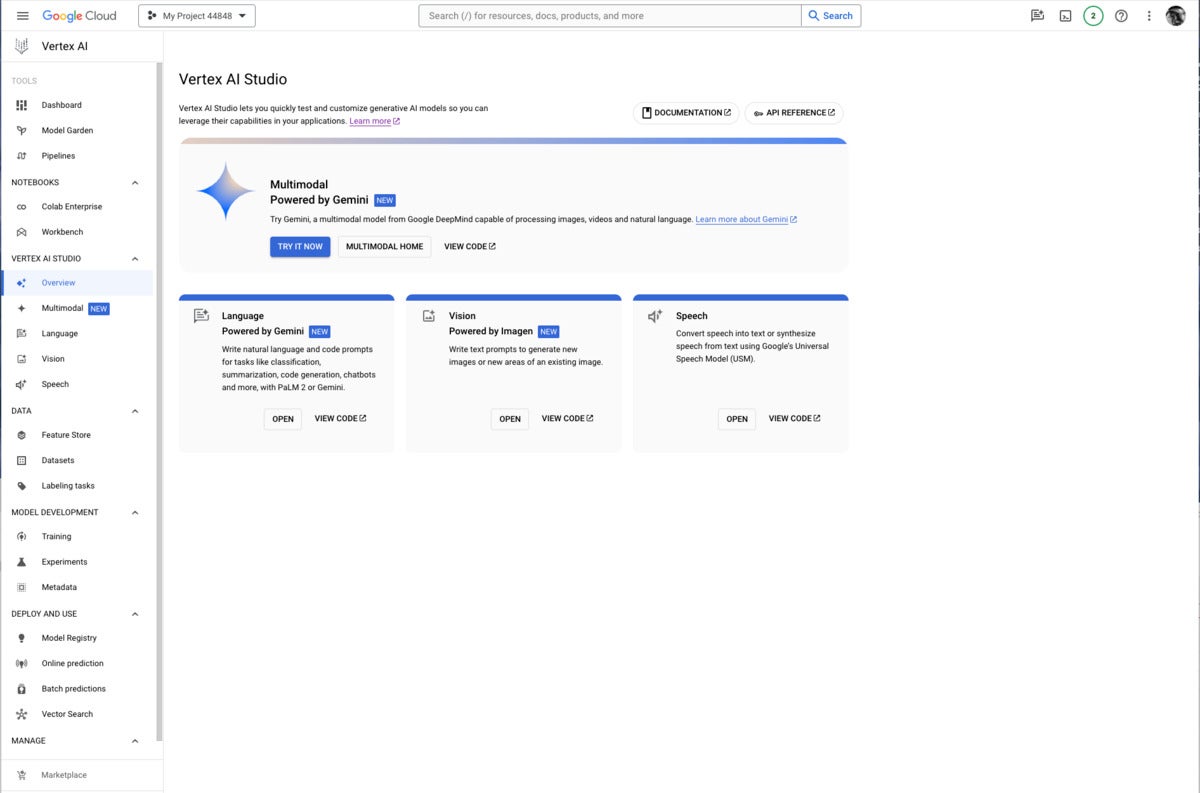

Google Vertex AI Studio overview console, emphasizing Google’s newest proprietary generative AI models. Note the use of Google Gemini for multimodal AI, PaLM 2 or Gemini for language AI, Imagen for vision (image generation and infill), and the Universal Speech Model for speech recognition and synthesis.

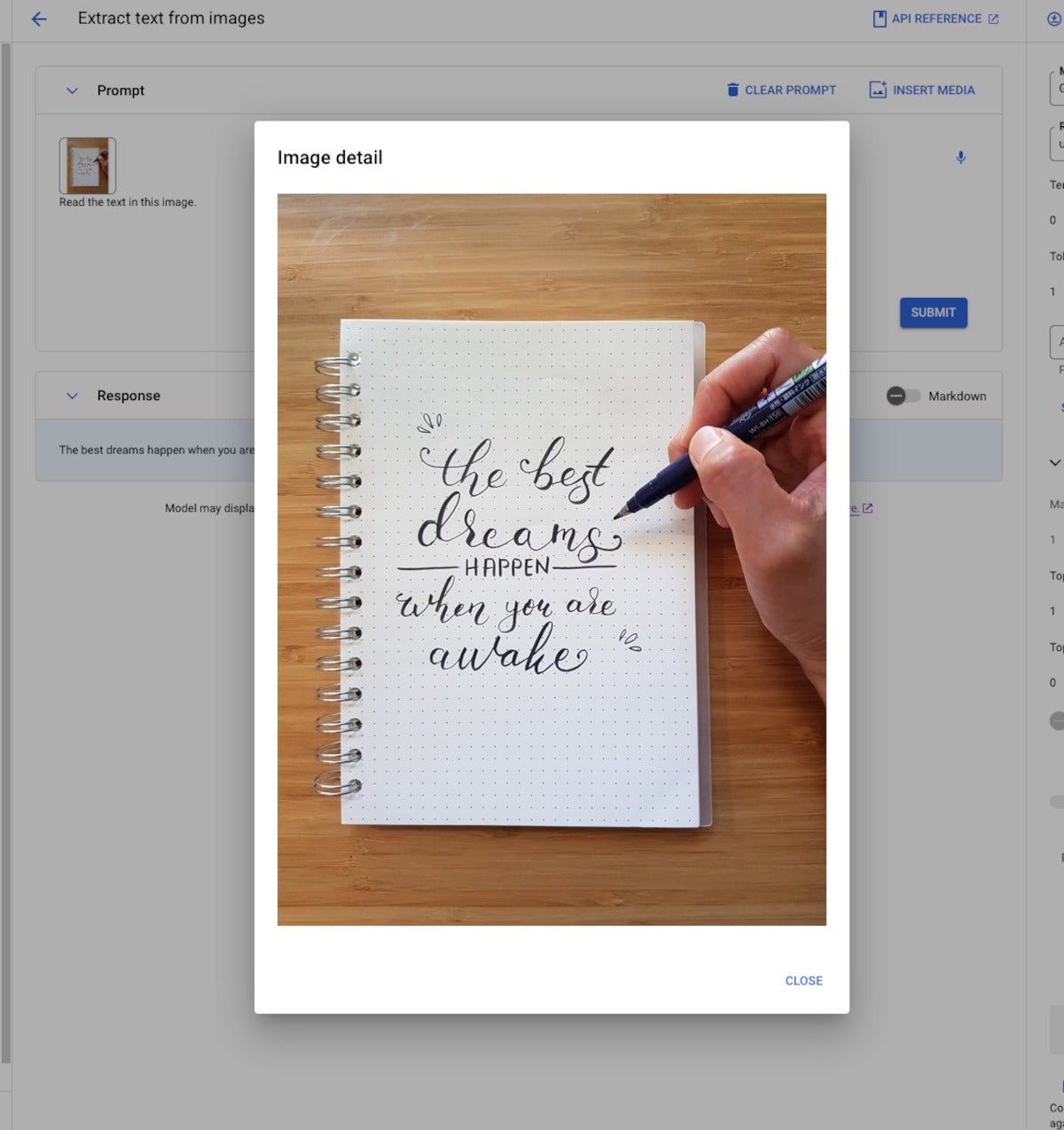

Multimodal generative AI demonstration from Vertex AI. The model, Gemini Pro Vision, is able to read the message from the image despite the elaborate calligraphy.

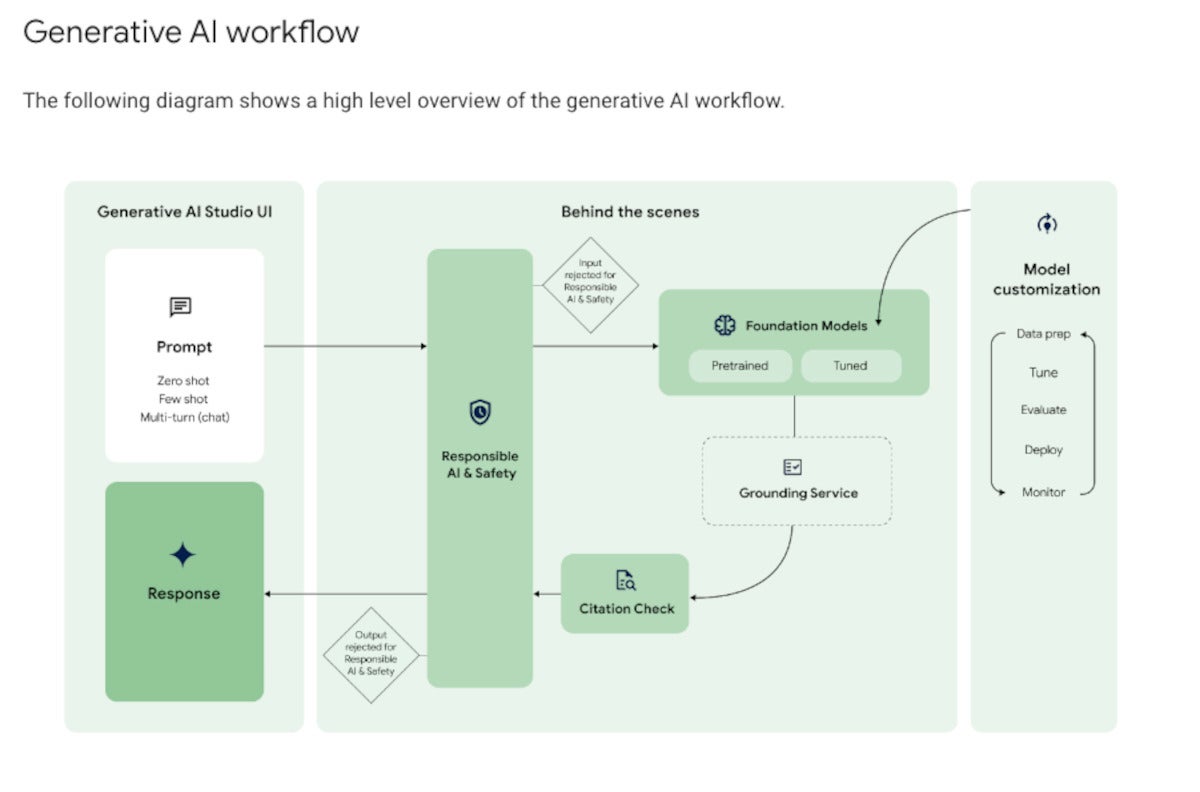

As you can see in the diagram below, Google Vertex AI’s generative AI workflow is a bit more complicated than simply throwing a prompt over the wall and getting a response back. Google’s responsible AI and safety filter applies both to the input and output, shielding the model from malicious prompts and the user from malicious responses.

The foundation model that processes the query can be pre-trained or tuned. Model tuning, if desired, can be performed using several methods, all of which are out-of-band for the query/response workflow and quite time-consuming.

If grounding is required, it’s applied here. The diagram shows the grounding service after the model in the flow; that’s not exactly how RAG works, as I explained in January. Out-of-band, you build your vector database. In-band, you generate an embedding vector for the query, use it to perform a similarity search against the vector database, and finally you include what you’ve retrieved from the vector database as an augmentation to the original query and pass it to the model.
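The out-of-band and in-band steps just described can be sketched end to end in plain Python. This is a toy under stated assumptions: `embed` is a word-count stand-in for a real embedding model, the "vector database" is a list in memory, and all function names are hypothetical rather than part of any Vertex AI API.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def build_index(documents):
    """Out-of-band step: embed each document once and store the vectors."""
    return [(doc, embed(doc)) for doc in documents]


def retrieve(index, query, k=1):
    """In-band step: embed the query and rank stored documents by similarity."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda pair: cosine(query_vector, pair[1]),
                    reverse=True)
    return [doc for doc, _vector in ranked[:k]]


def augment(query, passages):
    """Prepend the retrieved passages to the original query."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return f"Use only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    index = build_index([
        "the warranty lasts two years",
        "the pump ships with a spare seal",
    ])
    question = "how long is the warranty"
    # The augmented prompt, not the bare question, is what goes to the model.
    print(augment(question, retrieve(index, question)))
```

Note that retrieval happens before the model is called, which is the ordering the paragraph above contrasts with the diagram.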

At this point, the model generates answers, possibly based on multiple documents. The workflow allows for the inclusion of citations before sending the response back to the user through the safety filter.

The generative AI workflow typically starts with prompting by the user. On the back end, the prompt passes through a safety filter to pre-trained or tuned foundation models, optionally using a grounding service for RAG. After a citation check, the reply passes back through the safety filter and to the user.

As you might expect from the way RAG works, Vertex AI requires you to take a few steps to enable RAG. First, you need to “onboard to Vertex AI Search and Conversation,” a matter of a few clicks and a few minutes of waiting. Then you need to create an AI Search data store, which can be accomplished by crawling websites, importing data from a BigQuery table, importing data from a Cloud Storage bucket (PDF, HTML, TXT, JSONL, CSV, DOCX, or PPTX formats), or by calling an API.

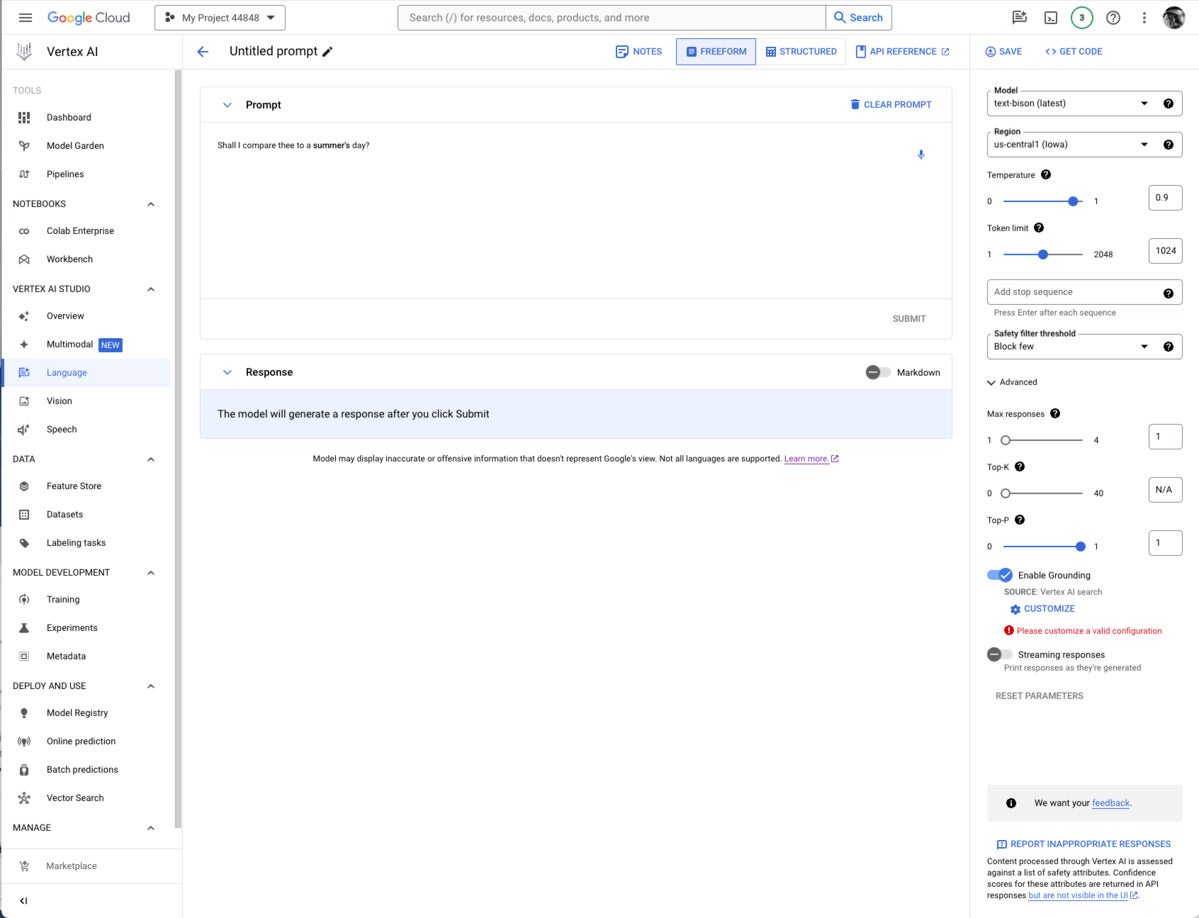

Finally, you need to set up a prompt with a model that supports RAG (currently only text-bison and chat-bison, both PaLM 2 language models) and configure it to use your AI Search and Conversation data store. If you are using the Vertex AI console, this setup is in the advanced section of the prompt parameters, as shown in the first screenshot below. If you are using the Vertex AI API, this setup is in the groundingConfig section of the parameters:

    {
      "instances": [
        { "prompt": "PROMPT" }
      ],
      "parameters": {
        "temperature": TEMPERATURE,
        "maxOutputTokens": MAX_OUTPUT_TOKENS,
        "topP": TOP_P,
        "topK": TOP_K,
        "groundingConfig": {
          "sources": [
            {
              "type": "VERTEX_AI_SEARCH",
              "vertexAiSearchDatastore": "VERTEX_AI_SEARCH_DATA_STORE"
            }
          ]
        }
      }
    }
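If you are assembling that request body in code, a small helper keeps the nesting straight. The sketch below only builds and serializes the JSON; it does not call any Google endpoint. The field names come from the template above, while the `build_grounded_request` helper and its default parameter values are assumptions chosen for illustration, not recommended settings.

```python
import json


def build_grounded_request(prompt, data_store, temperature=0.2,
                           max_output_tokens=256, top_p=0.95, top_k=40):
    """Return a request body shaped like the groundingConfig template.

    The default sampling values are illustrative assumptions only.
    """
    return {
        "instances": [{"prompt": prompt}],
        "parameters": {
            "temperature": temperature,
            "maxOutputTokens": max_output_tokens,
            "topP": top_p,
            "topK": top_k,
            "groundingConfig": {
                "sources": [
                    {
                        "type": "VERTEX_AI_SEARCH",
                        "vertexAiSearchDatastore": data_store,
                    }
                ]
            },
        },
    }


if __name__ == "__main__":
    # "VERTEX_AI_SEARCH_DATA_STORE" is the same placeholder used in the template.
    body = build_grounded_request("Shall I compare thee to a summer's day?",
                                  "VERTEX_AI_SEARCH_DATA_STORE")
    print(json.dumps(body, indent=2))
```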

If you’re constructing a prompt for a model that supports grounding, the Enable Grounding toggle at the right, under Advanced, will be enabled, and you can click it, as I have here. Clicking on Customize brings up another right-hand panel where you can select Vertex AI Search from the drop-down list and fill in the path to the Vertex AI data store.

Note that grounding or RAG may or may not be needed, depending on how and when the model was trained.

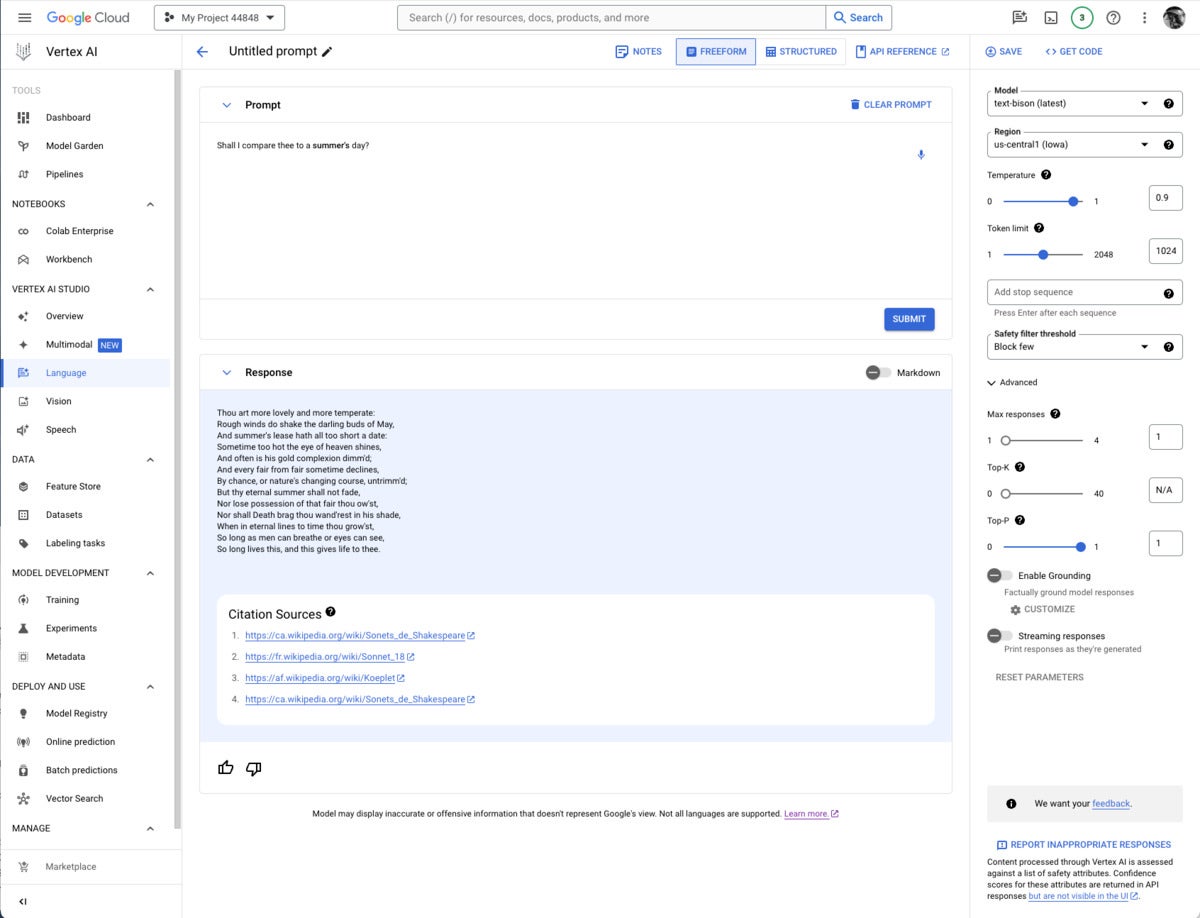

It’s usually worth checking to see whether you need grounding for any given prompt/model pair. I thought I might need to add the poems section of the Poetry.org site to get a good completion for “Shall I compare thee to a summer’s day?” But as you can see above, the text-bison model already knew the sonnet from four sources it could (and did) cite.

Google’s proprietary models offer some of the added value of the Vertex AI site. Gemini was unique in being a multimodal model (as well as a text and code generation model) as recently as a few weeks before I wrote this. Then OpenAI GPT-4 incorporated DALL-E, which allowed it to generate text or images. Currently, Gemini can generate text from images and videos, but GPT-4/DALL-E can’t.

Gemini versions currently offered on Vertex AI include Gemini Pro, a language model with “the best performing Gemini model with features for a wide range of tasks;” Gemini Pro Vision, a multimodal model “created from the ground up to be multimodal (text, images, videos) and to scale across a wide range of tasks;” and Gemma, “open checkpoint variants of Google DeepMind’s Gemini model suited for a variety of text generation tasks.”

Additional Gemini versions have been announced: Gemini 1.0 Ultra, Gemini Nano (to run on devices), and Gemini 1.5 Pro, a mixture-of-experts (MoE) mid-size multimodal model, optimized for scaling across a wide range of tasks, that performs at a similar level to Gemini 1.0 Ultra. According to Demis Hassabis, CEO and co-founder of Google DeepMind, Gemini 1.5 Pro comes with a standard 128,000 token context window, but a limited group of customers can try it with a context window of up to 1 million tokens via Vertex AI in private preview.

Imagen 2 is a text-to-image diffusion model from Google Brain Research that Google says has “an unprecedented degree of photorealism and a deep level of language understanding.” It’s competitive with DALL-E 3, Midjourney 6, and Adobe Firefly 2, among others.

Chirp is a version of a Universal Speech Model that has over 2B parameters and can transcribe in over 100 languages in a single model. It can turn audio speech to formatted text, caption videos for subtitles, and transcribe audio content for entity extraction and content classification.

Codey exists in versions for code completion (code-gecko), code generation (code-bison), and code chat (codechat-bison). The Codey APIs support the Go, GoogleSQL, Java, JavaScript, Python, and TypeScript languages, and Google Cloud CLI, Kubernetes Resource Model (KRM), and Terraform infrastructure as code. Codey competes with GitHub Copilot, StarCoder 2, CodeLlama, LocalLlama, DeepSeekCoder, CodeT5+, CodeBERT, CodeWhisperer, Bard, and various other LLMs that have been fine-tuned on code such as OpenAI Codex, Tabnine, and ChatGPTCoding.

PaLM 2 exists in versions for text (text-bison and text-unicorn), chat (chat-bison), and security-specific tasks (sec-palm, currently only available by invitation). PaLM 2 text-bison is good for summarization, question answering, classification, sentiment analysis, and entity extraction. PaLM 2 chat-bison is fine-tuned to conduct natural conversation, for example to perform customer service and technical support or serve as a conversational assistant for websites. PaLM 2 text-unicorn, the largest model in the PaLM family, excels at complex tasks such as coding and chain-of-thought (CoT).

Google also provides embedding models for text (textembedding-gecko and textembedding-gecko-multilingual) and multimodal (multimodalembedding). Embeddings plus a vector database (Vertex AI Search) allow you to implement semantic or similarity search and RAG, as described above.

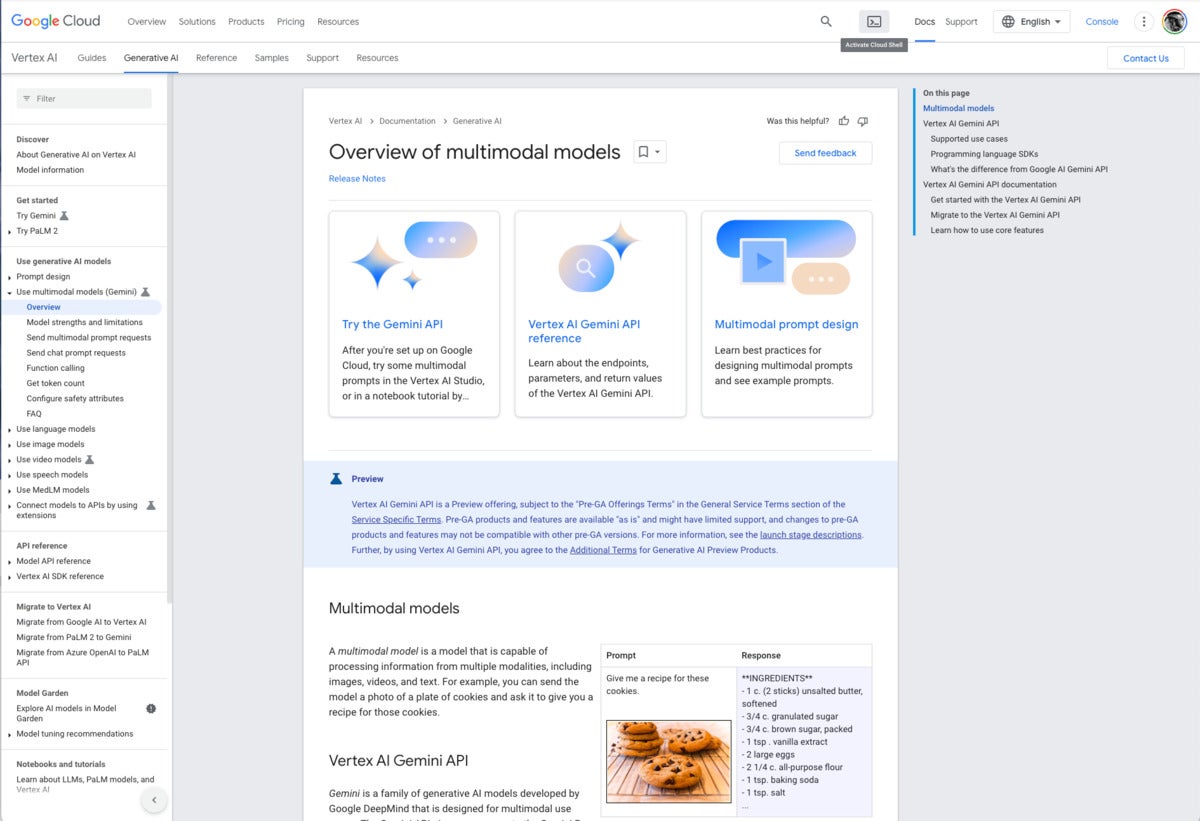

Vertex AI documentation overview of multimodal models. Note the example at the lower right. The text prompt “Give me a recipe for these cookies” and an unlabeled picture of chocolate-chip cookies causes Gemini to respond with an actual recipe for chocolate-chip cookies.

In addition to Google’s proprietary models, the Model Garden (documentation) currently offers roughly 90 open-source models and 38 task-specific solutions. In general, the models have model cards. The Google models are available through Vertex AI APIs and Google Colab as well as in the Vertex AI console. The APIs are billed on a usage basis.

The other models are typically available in Colab Enterprise and can be deployed as an endpoint. Note that endpoints are deployed on serious instances with accelerators (for example 96 CPUs and 8 GPUs), and therefore accrue significant charges as long as they are deployed.

Foundation models offered include Claude 3 Opus (coming soon), Claude 3 Sonnet (preview), Claude 3 Haiku (coming soon), Llama 2, and Stable Diffusion v1-5. Fine-tunable models include PyTorch-ZipNeRF for 3D reconstruction, AutoGluon for tabular data, Stable Diffusion LoRA (MediaPipe) for text to image generation, and MoViNet Video Action Recognition.

The Google AI prompt design strategies page does a decent and generally vendor-neutral job of explaining how to design prompts for generative AI. It emphasizes clarity, specificity, including examples (few-shot learning), adding contextual information, using prefixes for clarity, letting models complete partial inputs, breaking down complex prompts into simpler components, and experimenting with different parameter values to optimize results.
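Several of those strategies (clear instructions, examples, and prefixes that let the model complete a partial input) can be combined in a simple prompt builder. The sketch below is one hypothetical way to do it; the `build_few_shot_prompt` helper, the `Text:` and `JSON:` prefixes, and the sample data are illustrative choices, not anything prescribed by Google's guide.

```python
def build_few_shot_prompt(instruction, examples, new_input,
                          input_prefix="Text:", output_prefix="JSON:"):
    """Assemble an instruction, worked examples, and a partial final example.

    The prompt ends on a bare output prefix so the model completes the answer.
    """
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        parts += [f"{input_prefix} {example_input}",
                  f"{output_prefix} {example_output}",
                  ""]
    parts += [f"{input_prefix} {new_input}", output_prefix]
    return "\n".join(parts)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Extract the product and price from the text as JSON.",
        [("A latte costs $4.", '{"product": "latte", "price": 4}')],
        "Asian pears are $4 each.",
    )
    print(prompt)
```

Passing one example gives a one-shot prompt; adding more pairs to the list turns it into a few-shot prompt without changing the structure.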

Let’s look at three examples, one each for multimodal, text, and vision. The multimodal example is interesting because it uses two images and a text question to get an answer.


Here the prompt asks the price of what’s shown in the first image. The Gemini Pro Vision model has to match up the fruit in the first image to the second image, and read the hand-written price tag in the second image to come up with the answer, $4 each. The next two screenshots show details of the two images.

The naive reader might think that these are green apples. Nevertheless, if you view the next screenshot, you’ll see that they are labeled Asian pears.

The fruits here are distinct enough that you aren’t likely to confuse them, even if Asian pears are unfamiliar, and despite the pears having wrappings in this image but not in the previous image.

This text extraction example using the Gemini Pro model asks for a JSON format answer extracted from plain text, and offers a one-shot example for guidance in the middle Examples pane. The inferred result in the Test pane is correct.

Because I’m rather bloody-minded when I test, I used an image of my own, my back yard after a snowstorm. OK, it looks like my neighbor’s garage was misidentified as a house, but the first generated caption isn’t bad.

It’s almost always worthwhile to try prompt engineering first, but if that fails, the next step to customize a base model for your own purposes is model tuning. The Google AI guidance on model tuning and model tuning with AI Studio is very good, and to the point.

Currently Vertex AI only supports supervised fine-tuning of two text foundation models, Gemini 1.0 Pro and text-bison-001. While you can sometimes get by with as few as 20 examples when you’re doing supervised learning for fine-tuning, the usual recommendations from Google are listed in the table below.

| Task | No. of examples in data set |
|---|---|
| Classification | 100+ |
| Summarization | 100-500+ |
| Document search | 100+ |

If you’d like to try out fine-tuning for free, you can run it in Colab using this Python Quickstart.

There are two supported methods for fine-tuning text models in AI Studio, supervised tuning and RLHF tuning. Google recommends supervised tuning for classification, sentiment analysis, entity extraction, summarization of content that’s not complex, and domain-specific queries. Google recommends RLHF tuning for question answering, summarization of complex content, and content creation. However, supervised tuning is the only option for code models.

You can also tune embedding models and create distilled text models. Distilled text models use a large, capable teacher model and a labeled or an unlabeled training data set to train a smaller but more accurate student model.

If all of the above fails, your next step might be continued pre-training. The good news about that is that it uses unlabeled data. The bad news is that it requires lots of exemplars, takes days to run, and can be quite expensive.

Overall, Vertex AI Studio is a promising product that could potentially compete strongly with Amazon Bedrock and Azure AI Studio. On the other hand, Google has been busy shooting itself in the foot. The company mismanaged its Gemini Image rollout to the point where co-founder Sergey Brin returned from wherever he has been hiding to say “We definitely messed up on the image generation.” I won’t repeat my rant about Google’s history of eating its young from my review of Project IDX, but you can read it at the end of that article.

There are lots of good things about Vertex AI Studio, including its use of Google’s own models, its rapid adoption and deployment of new models from other vendors, and its straightforward support for RAG and model tuning. Meanwhile, why are the Generative AI Extensions—potentially the most useful facility of Vertex AI Studio, and the piece that competes with OpenAI GPTs—buried in a private preview?

As far as which AI app building platform you should choose, if you’re already heavily invested in the Google Cloud, then using Google Vertex AI Studio for building AI apps is probably a no-brainer, as long as you can get access to all the models and capabilities that you need, rather than be told that you can’t have them because they are in private preview.

Given Google’s investment in its cloud platform, I don’t seriously expect Vertex AI Studio to be killed outright in the next couple of years, but I wouldn’t be at all surprised by yet another rebranding.

—

Cost: Cost is based on usage. See https://cloud.google.com/vertex-ai/pricing#generative_ai_models.

Platform: Google Cloud Platform.

Next read this:

Posted by Richy George on 26 March, 2024

Vertex AI Studio is an online environment for building AI apps, featuring Gemini, Google’s own multimodal generative AI model that can work with text, code, audio, images, and video. In addition to Gemini, Vertex AI provides access to more than 40 proprietary models and more than 60 open source models in its Model Garden, for example the proprietary PaLM 2, Imagen, and Codey models from Google Research, open source models like Llama 2 from Meta, and Claude 2 and Claude 3 from Anthropic. Vertex AI also offers pre-trained APIs for speech, natural language, translation, and vision.

Vertex AI supports prompt engineering, hyper-parameter tuning, retrieval-augmented generation (RAG), and model tuning. You can tune foundation models with your own data, using tuning options such as adapter tuning and reinforcement learning from human feedback (RLHF), or perform style and subject tuning for image generation.

Vertex AI Extensions connect models to real-world data and real-time actions. Vertex AI allows you to work with models both in the Google Cloud console and via APIs in Python, Node.js, Java, and Go.

Competitive products include Amazon Bedrock, Azure AI Studio, LangChain/LangSmith, LlamaIndex, Poe, and the ChatGPT GPT Builder. The technical levels, scope, and programming language support of these products vary.

Vertex AI Studio is a Google Cloud console tool for building and testing generative AI models. It allows you to design and test prompts and customize foundation models to meet your application’s needs.

Foundation models are another term for the generative AI models found in Vertex AI. Calling them foundation models emphasizes the fact that they can be customized with your data for the specialized purposes of your application. They can generate text, chat, image, code, video, multimodal data, and embeddings.

Embeddings are vector representations of other data, for example text. Search engines often use vector embeddings, a cosine metric, and a nearest-neighbor algorithm to find text that is relevant (similar) to a query string.

The proprietary Google generative AI models available in Vertex AI include:

Vertex AI Studio allows you to test models using prompt samples. The prompt galleries are organized by the type of model (multimodal, text, vision, or speech) and the task being demonstrated, for example “summarize key insights from a financial report table” (text) or “read the text from this handwritten note image” (multimodal).

Vertex AI also helps you to design and save your own prompts. The types of prompt are broken down by purpose, for example text generation versus code generation and single-shot versus chat. Iterating on your prompts is a surprisingly powerful way of customizing a model to produce the output you want, as we’ll discuss below.

When prompt engineering isn’t enough to coax a model into producing the desired output, and you have a training data set in a suitable format, you can take the next step and tune a foundation model in one of several ways: supervised tuning, RLHF tuning, or distillation. Again, we’ll discuss this in more detail later on in this review.

The Vertex AI Studio speech tool can convert speech to text and text to speech. For text to speech you can choose your preferred voice and control its speed. For speech to text, Vertex AI Studio uses the Chirp model, but has length and file format limits. You can circumvent those by using the Cloud Speech-to-Text Console instead.

Google Vertex AI Studio overview console, emphasizing Google’s newest proprietary generative AI models. Note the use of Google Gemini for multimodal AI, PaLM2 or Gemini for language AI, Imagen for vision (image generation and infill), and the Universal Speech Model for speech recognition and synthesis.

Multimodal generative AI demonstration from Vertex AI. The model, Gemini Pro Vision, is able to read the message from the image despite the elaborate calligraphy.

As you can see in the diagram below, Google Vertex AI’s generative AI workflow is a bit more complicated than simply throwing a prompt over the wall and getting a response back. Google’s responsible AI and safety filter applies both to the input and output, shielding the model from malicious prompts and the user from malicious responses.

The foundation model that processes the query can be pre-trained or tuned. Model tuning, if desired, can be performed using several methods, all of which are out-of-band for the query/response workflow and quite time-consuming.

If grounding is required, it’s applied here. The diagram shows the grounding service after the model in the flow; that’s not exactly how RAG works, as I explained in January. Out-of-band, you build your vector database. In-band, you generate an embedding vector for the query, use it to perform a similarity search against the vector database, and finally you include what you’ve retrieved from the vector database as an augmentation to the original query and pass it to the model.
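The in-band and out-of-band steps just described can be sketched in a few lines of Python. This is a toy under stated assumptions, not how Vertex AI implements grounding: the embed function is a bag-of-words stand-in for a real embedding model, the "vector database" is a plain list, and the sample documents and function names are hypothetical.

```python
import math
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Out-of-band: build the "vector database" once, ahead of any query.
documents = [
    "Chirp is a speech model that transcribes audio in over 100 languages",
    "Imagen 2 is a text-to-image diffusion model",
    "Codey supports code completion and code generation",
]
vector_db = [(doc, embed(doc)) for doc in documents]

def retrieve(query, k=1):
    """In-band: embed the query and run a similarity search."""
    q = embed(query)
    ranked = sorted(vector_db, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def augment(query, k=1):
    """In-band: prepend what was retrieved to the original query."""
    context = "\n".join(retrieve(query, k))
    return f"Context:\n{context}\n\nQuestion: {query}"

# The augmented prompt is what would be passed to the model.
print(augment("which model transcribes audio"))
```

Note that retrieval happens before the model is called, which is the point of the correction to the diagram.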

At this point, the model generates answers, possibly based on multiple documents. The workflow allows for the inclusion of citations before sending the response back to the user through the safety filter.
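As a rough sketch of the overall request path (filter in, model, citations, filter out), consider the following Python. Everything here is a hypothetical placeholder: Google's safety filter is a trained classifier, not the word list used below, and fake_model merely echoes the prompt.

```python
BLOCKED_TERMS = {"malware"}  # hypothetical; stands in for a real safety classifier

def safety_filter(text):
    """Raise if the text trips the (toy) filter; otherwise pass it through."""
    if any(term in text.lower() for term in BLOCKED_TERMS):
        raise ValueError("blocked by safety filter")
    return text

def fake_model(prompt, sources):
    """Placeholder for a foundation model call: returns an answer and the sources used."""
    return f"Answer to: {prompt}", sources

def handle_request(prompt, sources):
    safe_prompt = safety_filter(prompt)              # filter the input
    answer, used = fake_model(safe_prompt, sources)  # pre-trained or tuned model
    if used:                                         # attach citations, if any
        answer += " [sources: " + ", ".join(used) + "]"
    return safety_filter(answer)                     # filter the output

print(handle_request("What is Chirp?", ["doc-1"]))
```

The same filter function wraps both ends of the call, mirroring the way the workflow shields the model from the prompt and the user from the response.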

The generative AI workflow typically starts with prompting by the user. On the back end, the prompt passes through a safety filter to pre-trained or tuned foundation models, optionally using a grounding service for RAG. After a citation check, the reply passes back through the safety filter and to the user.

As you might expect from the way RAG works, Vertex AI requires you to take a few steps to enable RAG. First, you need to “onboard to Vertex AI Search and Conversation,” a matter of a few clicks and a few minutes of waiting. Then you need to create an AI Search data store, which can be accomplished by crawling websites, importing data from a BigQuery table, importing data from a Cloud Storage bucket (PDF, HTML, TXT, JSONL, CSV, DOCX, or PPTX formats), or by calling an API.

Finally, you need to set up a prompt with a model that supports RAG (currently only text-bison and chat-bison, both PaLM 2 language models) and configure it to use your AI Search and Conversation data store. If you are using the Vertex AI console, this setup is in the advanced section of the prompt parameters, as shown in the first screenshot below. If you are using the Vertex AI API, this setup is in the groundingConfig section of the parameters:

{
  "instances": [
    { "prompt": "PROMPT" }
  ],
  "parameters": {
    "temperature": TEMPERATURE,
    "maxOutputTokens": MAX_OUTPUT_TOKENS,
    "topP": TOP_P,
    "topK": TOP_K,
    "groundingConfig": {
      "sources": [
        {
          "type": "VERTEX_AI_SEARCH",
          "vertexAiSearchDatastore": "VERTEX_AI_SEARCH_DATA_STORE"
        }
      ]
    }
  }
}
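If you’re assembling that request body in code, a small helper keeps the structure straight. The following Python sketch only builds and prints the JSON; it doesn’t call the API. The function name and the default parameter values are my own assumptions for illustration, and the data store path is left as the same placeholder used above.

```python
import json

def build_grounded_request(prompt, datastore, temperature=0.2,
                           max_output_tokens=256, top_p=0.8, top_k=40):
    """Build a request body with a groundingConfig section.

    The defaults are arbitrary illustrative values, not recommendations.
    """
    return {
        "instances": [{"prompt": prompt}],
        "parameters": {
            "temperature": temperature,
            "maxOutputTokens": max_output_tokens,
            "topP": top_p,
            "topK": top_k,
            "groundingConfig": {
                "sources": [
                    {
                        "type": "VERTEX_AI_SEARCH",
                        "vertexAiSearchDatastore": datastore,
                    }
                ]
            },
        },
    }

body = build_grounded_request(
    "Shall I compare thee to a summer's day?",
    "VERTEX_AI_SEARCH_DATA_STORE",  # placeholder: substitute your data store path
)
print(json.dumps(body, indent=2))
```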

If you’re constructing a prompt for a model that supports grounding, the Enable Grounding toggle at the right, under Advanced, will be enabled, and you can click it, as I have here. Clicking on Customize brings up another right-hand panel where you can select Vertex AI Search from the drop-down list and fill in the path to the Vertex AI data store.

Note that grounding or RAG may or may not be needed, depending on how and when the model was trained.

It’s usually worth checking to see whether you need grounding for any given prompt/model pair. I thought I might need to add the poems section of the Poetry.org site to get a good completion for “Shall I compare thee to a summer’s day?” But as you can see above, the text-bison model already knew the sonnet from four sources it could (and did) cite.

Google’s proprietary models offer some of the added value of the Vertex AI site. Gemini was unique in being a multimodal model (as well as a text and code generation model) as recently as a few weeks before I wrote this. Then OpenAI GPT-4 incorporated DALL-E, which allowed it to generate text or images. Currently, Gemini can generate text from images and videos, but GPT-4/DALL-E can’t.

Gemini versions currently offered on Vertex AI include Gemini Pro, a language model with “the best performing Gemini model with features for a wide range of tasks;” Gemini Pro Vision, a multimodal model “created from the ground up to be multimodal (text, images, videos) and to scale across a wide range of tasks;” and Gemma, “open checkpoint variants of Google DeepMind’s Gemini model suited for a variety of text generation tasks.”

Additional Gemini versions have been announced: Gemini 1.0 Ultra, Gemini Nano (to run on devices), and Gemini 1.5 Pro, a mixture-of-experts (MoE) mid-size multimodal model, optimized for scaling across a wide range of tasks, that performs at a similar level to Gemini 1.0 Ultra. According to Demis Hassabis, CEO and co-founder of Google DeepMind, Gemini 1.5 Pro comes with a standard 128,000 token context window, but a limited group of customers can try it with a context window of up to 1 million tokens via Vertex AI in private preview.

Imagen 2 is a text-to-image diffusion model from Google Brain Research that Google says has “an unprecedented degree of photorealism and a deep level of language understanding.” It’s competitive with DALL-E 3, Midjourney 6, and Adobe Firefly 2, among others.

Chirp is a version of a Universal Speech Model that has over 2B parameters and can transcribe in over 100 languages in a single model. It can turn audio speech to formatted text, caption videos for subtitles, and transcribe audio content for entity extraction and content classification.

Codey exists in versions for code completion (code-gecko), code generation (code-bison), and code chat (codechat-bison). The Codey APIs support the Go, GoogleSQL, Java, JavaScript, Python, and TypeScript languages, and Google Cloud CLI, Kubernetes Resource Model (KRM), and Terraform infrastructure as code. Codey competes with GitHub Copilot, StarCoder 2, CodeLlama, LocalLlama, DeepSeekCoder, CodeT5+, CodeBERT, CodeWhisperer, Bard, and various other LLMs that have been fine-tuned on code such as OpenAI Codex, Tabnine, and ChatGPTCoding.

PaLM 2 exists in versions for text (text-bison and text-unicorn), chat (chat-bison), and security-specific tasks (sec-palm, currently only available by invitation). PaLM 2 text-bison is good for summarization, question answering, classification, sentiment analysis, and entity extraction. PaLM 2 chat-bison is fine-tuned to conduct natural conversation, for example to perform customer service and technical support or serve as a conversational assistant for websites. PaLM 2 text-unicorn, the largest model in the PaLM family, excels at complex tasks such as coding and chain-of-thought (CoT).

Google also provides embedding models for text (textembedding-gecko and textembedding-gecko-multilingual) and multimodal (multimodalembedding). Embeddings plus a vector database (Vertex AI Search) allow you to implement semantic or similarity search and RAG, as described above.

Vertex AI documentation overview of multimodal models. Note the example at the lower right. The text prompt “Give me a recipe for these cookies” and an unlabeled picture of chocolate-chip cookies causes Gemini to respond with an actual recipe for chocolate-chip cookies.

In addition to Google’s proprietary models, the Model Garden (documentation) currently offers roughly 90 open-source models and 38 task-specific solutions. In general, the models have model cards. The Google models are available through Vertex AI APIs and Google Colab as well as in the Vertex AI console. The APIs are billed on a usage basis.

The other models are typically available in Colab Enterprise and can be deployed as an endpoint. Note that endpoints are deployed on serious instances with accelerators (for example 96 CPUs and 8 GPUs), and therefore accrue significant charges as long as they are deployed.

Foundation models offered include Claude 3 Opus (coming soon), Claude 3 Sonnet (preview), Claude 3 Haiku (coming soon), Llama 2, and Stable Diffusion v1-5. Fine-tunable models include PyTorch-ZipNeRF for 3D reconstruction, AutoGluon for tabular data, Stable Diffusion LoRA (MediaPipe) for text to image generation, and MoViNet Video Action Recognition.

The Google AI prompt design strategies page does a decent and generally vendor-neutral job of explaining how to design prompts for generative AI. It emphasizes clarity, specificity, including examples (few-shot learning), adding contextual information, using prefixes for clarity, letting models complete partial inputs, breaking down complex prompts into simpler components, and experimenting with different parameter values to optimize results.
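Several of those strategies (examples, prefixes, and letting the model complete a partial input) fit in one small prompt builder. Here is a minimal Python sketch; the function name, prefixes, and sample reviews are all hypothetical, and the output is just a string you would paste or send to whatever model you’re testing.

```python
def build_few_shot_prompt(instruction, examples, new_input,
                          in_prefix="Text:", out_prefix="Sentiment:"):
    """Assemble a few-shot prompt with consistent prefixes.

    The prompt ends on the bare output prefix so the model completes it.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"{in_prefix} {text}", f"{out_prefix} {label}", ""]
    lines += [f"{in_prefix} {new_input}", out_prefix]
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [
        ("The setup took five minutes and just worked.", "positive"),
        ("The console kept timing out on me.", "negative"),
    ],
    "Tuning was slower than I expected.",
)
print(prompt)
```

Keeping the prefixes identical across the examples and the final input is what lets the model infer the expected output format.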

Let’s look at three examples, one each for multimodal, text, and vision. The multimodal example is interesting because it uses two images and a text question to get an answer.


Here the prompt asks the price of what’s shown in the first image. The Gemini Pro Vision model has to match up the fruit in the first image to the second image, and read the hand-written price tag in the second image to come up with the answer, $4 each. The next two screenshots show details of the two images.

The naive reader might think that these are green apples, but the next screenshot shows that they are labeled Asian pears.

The fruits here are distinct enough that you aren’t likely to confuse them, even if Asian pears are unfamiliar, and despite the pears having wrappings in this image but not in the previous image.

This text extraction example using the Gemini Pro model asks for a JSON format answer extracted from plain text, and offers a one-shot example for guidance in the middle Examples pane. The inferred result in the Test pane is correct.

Because I’m rather bloody-minded when I test, I used an image of my own, my back yard after a snowstorm. OK, it looks like my neighbor’s garage was misidentified as a house, but the first generated caption isn’t bad.

It’s almost always worthwhile to try prompt engineering first, but if that fails, the next step to customize a base model for your own purposes is model tuning. The Google AI guidance on model tuning and model tuning with AI Studio is very good, and to the point.

Currently Vertex AI only supports supervised fine-tuning of two text foundation models, Gemini 1.0 Pro and text-bison-001. While you can sometimes get by with as few as 20 examples when you’re doing supervised learning for fine-tuning, the usual recommendations from Google are listed in the table below.

| Task | No. of examples in data set |
|---|---|
| Classification | 100+ |
| Summarization | 100-500+ |
| Document search | 100+ |

—
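A quick sanity check against those recommendations might look like the following Python sketch. The thresholds are the lower bounds from the table and the 20-example floor mentioned above; the function name and the wording of the verdicts are my own.

```python
# Lower bounds taken from the table of recommended data set sizes.
RECOMMENDED_MIN_EXAMPLES = {
    "classification": 100,
    "summarization": 100,   # table says 100-500+
    "document search": 100,
}
BARE_MINIMUM = 20  # "as few as 20 examples" can sometimes be enough

def check_dataset_size(task, n_examples):
    """Compare a data set size with the recommended minimum for a task."""
    minimum = RECOMMENDED_MIN_EXAMPLES[task.lower()]
    if n_examples >= minimum:
        return "ok"
    if n_examples >= BARE_MINIMUM:
        return "below recommendation, may still work"
    return "too small"

print(check_dataset_size("Classification", 150))
print(check_dataset_size("Summarization", 40))
print(check_dataset_size("Document search", 5))
```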

If you’d like to try out fine-tuning for free, you can run it in Colab using this Python Quickstart.

There are two supported methods for fine-tuning text models in AI Studio, supervised tuning and RLHF tuning. Google recommends supervised tuning for classification, sentiment analysis, entity extraction, summarization of content that’s not complex, and domain-specific queries. Google recommends RLHF tuning for question answering, summarization of complex content, and content creation. However, supervised tuning is the only option for code models.

You can also tune embedding models and create distilled text models. Distilled text models use a large, capable teacher model and a labeled or an unlabeled training data set to train a smaller but more accurate student model.

If all of the above fails, your next step might be continued pre-training. The good news about that is that it uses unlabeled data. The bad news is that it requires lots of exemplars, takes days to run, and can be quite expensive.

Overall, Vertex AI Studio is a promising product that could potentially compete strongly with Amazon Bedrock and Azure AI Studio. On the other hand, Google has been busy shooting itself in the foot. The company mismanaged its Gemini Image rollout to the point where co-founder Sergey Brin returned from wherever he has been hiding to say “We definitely messed up on the image generation.” I won’t repeat my rant about Google’s history of eating its young from my review of Project IDX, but you can read it at the end of that article.

There are lots of good things about Vertex AI Studio, including its use of Google’s own models, its rapid adoption and deployment of new models from other vendors, and its straightforward support for RAG and model tuning. Meanwhile, why are the Generative AI Extensions—potentially the most useful facility of Vertex AI Studio, and the piece that competes with OpenAI GPTs—buried in a private preview?

As for which AI app-building platform you should choose: if you’re already heavily invested in Google Cloud, then using Google Vertex AI Studio for building AI apps is probably a no-brainer, as long as you can get access to all the models and capabilities that you need, rather than being told that you can’t have them because they are in private preview.

Given Google’s investment in its cloud platform, I don’t seriously expect Vertex AI Studio to be killed outright in the next couple of years, but I wouldn’t be at all surprised by yet another rebranding.

—

Cost: Cost is based on usage. See https://cloud.google.com/vertex-ai/pricing#generative_ai_models.

Platform: Google Cloud Platform.


Posted by Richy George on 25 March, 2024