Posted by Richy George on 4 July, 2024

Oracle celebrated the beginning of July with the general availability of three releases of its open source database, MySQL: MySQL 8.0.38; the first update of its long-term support (LTS) version, MySQL 8.4; and MySQL 9.0, the first major version of its 9.x innovation release.

While the v8 releases are bug fixes and security releases only, MySQL 9.0 Innovation is a shiny new version with additional features, as well as some changes that may require attention when upgrading from a previous version.

The new 9.0 versions of MySQL Clients, Tools, and Connectors are also live, and Oracle recommends that they be used with MySQL Server 8.0 and 8.4 LTS as well as with 9.0 Innovation.

This initial 9.x Innovation release, Oracle says, is preparation for new features in upcoming releases. But it still contains useful features, and MySQL 8.4 LTS installations can be upgraded to it; during MSI installations on Windows, the MySQL Configurator performs the upgrade automatically, without user intervention.

The major changes include:

The mysql_native_password authentication plugin, which relies on the insecure and elderly SHA-1 hash and was deprecated in MySQL 8, is gone, and the server now rejects mysql_native authentication requests from older client programs that do not have the CLIENT_PLUGIN_AUTH capability. Before upgrading to 9.0, Oracle says, user accounts in 8.0 and 8.4 must be altered from mysql_native_password to caching_sha2_password.
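As a rough illustration of that account change, the Python sketch below builds one ALTER USER statement per account. The helper names, the sample accounts, and the placeholder password are hypothetical. In practice the account list would likely come from a query such as SELECT user, host FROM mysql.user WHERE plugin = 'mysql_native_password', and each account would need a real new password, because the two plugins store credentials in different hash formats.

```python
def quote(value: str) -> str:
    """Quote a string literal for MySQL: escape backslashes, double single quotes."""
    return "'" + value.replace("\\", "\\\\").replace("'", "''") + "'"


def build_alter_statements(accounts, new_password="<new-password>"):
    """Return ALTER USER statements that switch accounts to caching_sha2_password.

    `accounts` is an iterable of (user, host) pairs. `new_password` is a
    placeholder assumption; a real migration sets a distinct password per account.
    """
    statements = []
    for user, host in accounts:
        statements.append(
            f"ALTER USER {quote(user)}@{quote(host)} "
            f"IDENTIFIED WITH caching_sha2_password BY {quote(new_password)};"
        )
    return statements


if __name__ == "__main__":
    # Hypothetical accounts that still use mysql_native_password.
    for statement in build_alter_statements([("app", "localhost"), ("report", "%")]):
        print(statement)
```

The script only prints the statements, so they can be reviewed before being run against an 8.0 or 8.4 server ahead of the upgrade.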

In the Optimizer, ER_SUBQUERY_NO_1_ROW has been removed from the list of errors which are ignored by statements which include the IGNORE keyword. This change can make an UPDATE, DELETE, or INSERT statement which includes the IGNORE keyword raise errors if it contains a SELECT statement with a scalar subquery that produces more than one row.

MySQL is now on a three-month release cadence, with major LTS releases every two years. In October, Oracle says we can expect bug and security releases MySQL 8.4.2 LTS and MySQL 8.0.39, and the MySQL 9.1 Innovation release, with new features as well as bug and security fixes.

Posted by Richy George on 2 July, 2024

Open-source vector database provider Qdrant has launched BM42, a vector-based hybrid search algorithm intended to provide more accurate and efficient retrieval for retrieval-augmented generation (RAG) applications. BM42 combines the best of traditional text-based search and vector-based search to lower the costs for RAG and AI applications, Qdrant said.

Qdrant’s BM42 was announced July 2. Traditional keyword search engines, using algorithms such as BM25, have been around for more than 50 years and are not optimized for the precise retrieval needed in modern applications, according to Qdrant. As a result they struggle with specific RAG demands, particularly with short segments requiring further context to inform successful search and retrieval. Moving away from keyword-based search to a fully vector-based approach offers a new industry standard, Qdrant said.

“BM42, for short texts which are more prominent in RAG scenarios, provides the efficiency of traditional text search approaches, plus the context of vectors, so is more flexible, precise, and efficient,” Andrey Vasnetsov, Qdrant CTO and co-founder, said. This helps to make vector search more universally applicable, he added.

Unlike traditional keyword-based search suited for long-form content, BM42 integrates sparse and dense vectors to pinpoint relevant information within a document. A sparse vector handles exact term matching, while dense vectors handle semantic relevance and deep meaning, according to the company.
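To make the sparse-plus-dense idea concrete, here is a minimal Python sketch of generic hybrid scoring. It is not Qdrant's BM42 implementation: the weighting scheme, the alpha parameter, the function names, and the toy vectors are all assumptions chosen for illustration. The sparse part rewards exact term overlap, and the dense part rewards semantic closeness via cosine similarity.

```python
import math


def sparse_score(query_terms, doc_terms):
    """Dot product over shared terms: only exact term matches contribute."""
    return sum(w * doc_terms[t] for t, w in query_terms.items() if t in doc_terms)


def cosine(a, b):
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_score(query, doc, alpha=0.5):
    """Blend sparse and dense scores; alpha is a hypothetical tuning knob."""
    return (alpha * sparse_score(query["sparse"], doc["sparse"])
            + (1 - alpha) * cosine(query["dense"], doc["dense"]))


# Toy documents with made-up term weights and 2-dimensional embeddings.
docs = {
    "a": {"sparse": {"mysql": 1.0, "upgrade": 0.5}, "dense": [0.9, 0.1]},
    "b": {"sparse": {"iceberg": 1.0}, "dense": [0.8, 0.2]},
    "c": {"sparse": {"mysql": 0.2}, "dense": [0.1, 0.9]},
}
query = {"sparse": {"mysql": 1.0}, "dense": [1.0, 0.0]}

ranked = sorted(docs, key=lambda name: hybrid_score(query, docs[name]), reverse=True)
print(ranked)
```

In this toy data, document "b" has no matching term yet still outranks "c" because its dense vector sits close to the query, which is the kind of trade-off a hybrid scheme is meant to capture.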

Posted by Richy George on 26 June, 2024

Buoyed by customer demand, SingleStore, the company behind the relational database SingleStoreDB, has decided to natively integrate Apache Iceberg into its offering to help its enterprise customers make use of data stored in data lakehouses.

“With this new integration, SingleStore aims to transform the dormant data inside lakehouses into a valuable real-time asset for enterprise applications. Apache Iceberg, a popular open standard for data lakehouses, provides CIOs with cost-efficient storage and querying of large datasets,” said Dion Hinchcliffe, senior analyst at The Futurum Group.

Hinchcliffe pointed out that SingleStore’s integration includes updates that help its customers bypass the challenges that they may typically face when adopting traditional methods to make the data in Iceberg tables more immediate.

These challenges include complex, extensive ETL (extract, transform, load) workflows and compute-intensive Spark jobs.

Some of the key features of the integration are low-latency ingestion, bi-directional data flow, and real-time performance at lower costs, the company said.

Explaining how SingleStore achieves low latency across queries and updates, IDC research vice president Carl Olofson said that the company, formerly known as MemSQL and the maker of a memory-optimized, high-performance relational database management system, uses memory features as a sort of cache.

“By doing so, the company can dramatically improve the speed with which Iceberg tables can be queried and updated,” Olofson explained, adding that the company might be proactively loading data from Iceberg into their internal memory-optimized format.

Before the Iceberg integration, SingleStore held data in a form or format that is optimized for rapid swapping into memory, where all data processing took place, the analyst said.

Several other database vendors, notably Databricks, have made attempts to adopt the Apache Iceberg table format due to its rising popularity with enterprises.

Earlier this month, Databricks agreed to acquire Tabular, the storage platform vendor led by the creators of Apache Iceberg, in order to promote data interoperability in lakehouses.

Another data lakehouse format, Delta Lake, developed by Databricks and later open sourced via The Linux Foundation, competes with Iceberg tables.

Currently, the company is working on another format that allows enterprises to use both Iceberg and Delta Lake tables.

Both Olofson and Hinchcliffe pointed out that several vendors and offerings — such as Google’s BigQuery, Starburst, IBM’s Watsonx.data, SAP’s DataSphere, Teradata, Cloudera, Dremio, Presto, Hive, Impala, StarRocks, and Doris — have integrated Iceberg as an open source analytics table format for very large datasets.

The native integration of Iceberg into SingleStoreDB is currently in public preview.

As part of the updates to SingleStoreDB, the company is adding new capabilities to its full-text search feature that improve relevance scoring, phonetic similarity, fuzzy matching, and keyword proximity-based ranking.

The combination of these capabilities allows enterprises to eliminate the need for additional specialty databases to build generative AI-based applications, the company explained.

Additionally, the company has introduced an autoscaling feature in public preview that allows enterprises to manage workloads or applications by scaling compute resources up or down.

It also lets users define thresholds for CPU and memory usage for autoscaling, to avoid any unnecessary consumption.

Further, the company said it is introducing a new deployment option for the database via Helios BYOC, which is a managed version of the database via a virtual private cloud.

This offering is now available in private preview in AWS and enterprise customers can run SingleStore in their own tenants while complying with data residency and governance policies, the company said.

Posted by Richy George on 26 June, 2024

Oracle is adding new generative AI-focused features to its HeatWave data analytics cloud service, previously known as MySQL HeatWave.

The new name highlights how HeatWave offers more than just MySQL support, and also includes HeatWave Gen AI, HeatWave Lakehouse, and HeatWave AutoML, said Nipun Agarwal, senior vice president of HeatWave at Oracle.

At its annual CloudWorld conference in September 2023, Oracle previewed a series of generative AI-focused updates for what was then MySQL HeatWave.

These updates included an interface driven by a large language model (LLM), enabling enterprise users to interact with different aspects of the service in natural language, a new Vector Store, HeatWave Chat, and AutoML support for HeatWave Lakehouse.

Some of these updates, along with additional capabilities, have been combined to form the HeatWave Gen AI offering inside HeatWave, Oracle said, adding that all these capabilities and features are now generally available at no additional cost.

In a first among database vendors, Oracle has added support for LLMs inside a database, analysts said.

HeatWave Gen AI’s in-database LLM support, which leverages smaller LLMs with fewer parameters such as Mistral-7B and Meta’s Llama 3-8B running inside the database, is expected to reduce infrastructure cost for enterprises, they added.

“This approach not only reduces memory consumption but also enables the use of CPUs instead of GPUs, making it cost-effective, which given the cost of GPUs will become a trend at least in the short term until AMD and Intel catch up with Nvidia,” said Ron Westfall, research director at The Futurum Group.

Another reason to use smaller LLMs inside the database is the ability to have more influence on the model with fine tuning, said David Menninger, executive director at ISG’s Ventana Research.

“With a smaller model the context provided via retrieval augmented generation (RAG) techniques has a greater influence on the results,” Menninger explained.

Westfall also gave the example of IBM’s Granite models, saying that the approach to using smaller models, especially for enterprise use cases, was becoming a trend.

The in-database LLMs, according to Oracle, will allow enterprises to search data, generate or summarize content, and perform RAG with HeatWave’s Vector Store.

Separately, HeatWave Gen AI also comes integrated with the company’s OCI Generative AI service, providing enterprises with access to pre-trained and other foundational models from LLM providers.

A number of database vendors that didn’t already offer specialty vector databases have added vector capabilities to their wares over the last 12 months—MongoDB, DataStax, Pinecone, and CosmosDB for NoSQL among them — enabling customers to build AI and generative AI-based use cases over data stored in these databases without moving data to a separate vector store or database.

Oracle’s Vector Store, already showcased in September, automatically creates embeddings after ingesting data in order to process queries faster.

Another capability added to HeatWave Gen AI is scale-out vector processing that will allow HeatWave to support VECTOR as a data type and in turn help enterprises process queries faster.

“Simply put, this is like adding RAG to a standard relational database,” Menninger said. “You store some text in a table along with an embedding of that text as a VECTOR data type. Then when you query, the text of your query is converted to an embedding. The embedding is compared to those in the table and the ones with the shortest distance are the most similar.”
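The lookup Menninger describes can be sketched in a few lines of Python. This is a toy illustration rather than HeatWave's implementation: the three-dimensional embeddings are invented by hand, the table is an in-memory list, and the function names are hypothetical. A real system would obtain embeddings from a model and use an index instead of a full scan.

```python
import math


def euclidean(a, b):
    """Straight-line distance between two embeddings of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Toy "table": each row stores text alongside a made-up embedding of that text.
rows = [
    ("MySQL 9.0 removes an old authentication plugin", [0.9, 0.1, 0.0]),
    ("Iceberg is an open table format for lakehouses", [0.1, 0.9, 0.1]),
    ("Quicksort has a quadratic worst case", [0.0, 0.2, 0.9]),
]


def nearest(query_embedding, table, k=1):
    """Return the k rows whose embeddings are closest to the query embedding."""
    return sorted(table, key=lambda row: euclidean(query_embedding, row[1]))[:k]


# Stand-in for converting the query text to an embedding.
query_embedding = [0.8, 0.2, 0.1]
best_text, _ = nearest(query_embedding, rows)[0]
print(best_text)
```

The row with the shortest distance to the query embedding is treated as the most similar, which matches the comparison step in the quote.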

Another new capability added to HeatWave Gen AI is HeatWave Chat, a Visual Studio Code plug-in for MySQL Shell that provides a graphical interface for HeatWave Gen AI and enables developers to ask questions in natural language or SQL.

The retention of chat history makes it easier for developers to refine search results iteratively, Menninger said.

HeatWave Chat comes in with another feature dubbed the Lakehouse Navigator, which allows enterprise users to select files from object storage to create a new vector store.

This integration is designed to enhance user experience and efficiency of developers and analysts building out a vector store, Westfall said.

Posted by Richy George on 25 June, 2024

DataStax is updating its tools for building generative AI-based applications in an effort to ease and accelerate application development for enterprises, databases, and service providers.

One of these tools is Langflow, which DataStax acquired in April. It is an open source, web-based no-code graphical user interface (GUI) that allows developers to visually prototype LangChain flows and iterate them to develop applications faster.

LangChain is a modular framework for Python and JavaScript that simplifies the development of applications that are powered by generative AI language models or LLMs.

According to the company’s Chief Product Officer Ed Anuff, the update to Langflow is a new version dubbed Langflow 1.0, which is the official open source release that comes after months of community feedback on the preview.

“Langflow 1.0 adds more flexible, modular components and features to support complex AI pipelines required for more advanced retrieval augmented generation (RAG) techniques and multi-agent architectures,” Anuff said, adding that Langflow’s execution engine was now Turing complete.

Turing completeness is a computer science term for a programmable system that, given enough time and memory, can carry out any computation that any other programmable computer can.

Langflow 1.0 also comes with LangSmith integration that will allow enterprise developers to monitor LLM-based applications and perform observability on them, the company said.

A managed version of Langflow is also being made available via DataStax in a public preview.

“Astra DB environment details will be available in Langflow and users will be able to access Langflow via the Astra Portal, and usage will be free,” Anuff explained.

DataStax has also released a new version of RAGStack, its curated stack of open-source software for implementing RAG in generative AI-based applications using Astra DB Serverless or Apache Cassandra as a vector store.

The new version, dubbed RAGStack 1.0, comes with new features such as Langflow, Knowledge Graph RAG, and ColBERT among others.

The Knowledge Graph RAG feature, according to the company, provides an alternative way to retrieve information using a graph-based representation. This alternative method can be more accurate than vector-based similarity search alone with Astra DB, it added.

Other features include the introduction of Text2SQL and Text2CQL (Cassandra Query Language) to bring all kinds of data into the generative AI flow for application development.

While DataStax offers a separate non-managed version of RAGStack 1.0 under the name Luna for RAGStack, Anuff said that the managed version offers more value for enterprises.

“RAGStack is based on open source components, and you could take all of those projects and stitch them together yourself. However, we think there is a huge amount of value for companies in getting their stack tested and integrated for them, so they can trust that it will deliver at scale in the way that they want,” the chief product officer explained.

The company has also partnered with several other companies such as Unstructured to help developers extract and transform data to be stored in Astra DB for building generative AI-based applications.

“The partnership with Unstructured provides DataStax customers with the ability to use the latter’s capabilities to extract and transform data in multiple formats – including HTML, PDF, CSV, PNG, PPTX – and convert it into JSON files for use in AI initiatives,” said Matt Aslett, director at ISG’s Ventana Research.

Other partnerships include collaboration with the top embedding providers, such as OpenAI, Hugging Face, Mistral AI, and Nvidia among others.

Posted by Richy George on 24 June, 2024

When I reviewed Amazon CodeWhisperer, Google Bard, and GitHub Copilot in June of 2023, CodeWhisperer could generate code in an IDE and did security reviews, but it lacked a chat window and code explanations. The current version of CodeWhisperer is now called Amazon Q Developer, and it does have a chat window that can explain code, and several other features that may be relevant to you, especially if you do a lot of development using AWS.

Amazon Q Developer currently runs in Visual Studio Code, Visual Studio, JetBrains IDEs, the Amazon Console, and the macOS command line. Q Developer also offers asynchronous agents, programming language translations, and Java code transformations/upgrades. In addition to generating, completing, and discussing code, Q Developer can write unit tests, optimize code, scan for vulnerabilities, and suggest remediations. It supports conversations in English, and code in the Python, Java, JavaScript, TypeScript, C#, Go, Rust, PHP, Ruby, Kotlin, C, C++, shell scripting, SQL, and Scala programming languages.

You can chat with Amazon Q Developer about AWS capabilities, and ask it to review your resources, analyze your bill, or architect solutions. It knows about AWS well-architected patterns, documentation, and solution implementation.

According to Amazon, Amazon Q Developer is “powered by Amazon Bedrock” and trained on “high-quality AWS content.” Since Bedrock supports many foundation models, it’s not clear from the web statement which one was used for Amazon Q Developer. I asked, and got this answer from an AWS spokesperson: “Amazon Q uses multiple models to execute its tasks and uses logic to route tasks to the model that is the best fit for the job.”

Amazon Q Developer has a reference tracker that detects whether a code suggestion might be similar to publicly available code. The reference tracker can label these with a repository URL and project license information, or optionally filter them out.

Amazon Q Developer directly competes with GitHub Copilot, JetBrains AI, and Tabnine, and indirectly competes with a number of large language models (LLMs) and small language models (SLMs) that know about code, such as Code Llama, StarCoder, Bard, OpenAI Codex, and Mistral Codestral. GitHub Copilot can converse in dozens of natural languages, as opposed to Amazon Q Developer’s one, and supports a number of extensions from programming, cloud, and database vendors, as opposed to Amazon Q Developer’s AWS-only ties.

Given the multiple environments in which Amazon Q Developer can run, it’s not a surprise that there are multiple installers. The only tricky bit is signing and authentication.

You can install Amazon Q Developer from the Visual Studio Code Marketplace, or from the Extensions sidebar in Visual Studio Code. You can get to that sidebar from the Extensions icon at the far left, by pressing Shift-Command-X, or by choosing Extensions: Install Extensions from the command palette. Type “Amazon Q” to find it. Once you’ve installed the extension, you’ll need to authenticate to AWS as discussed below.

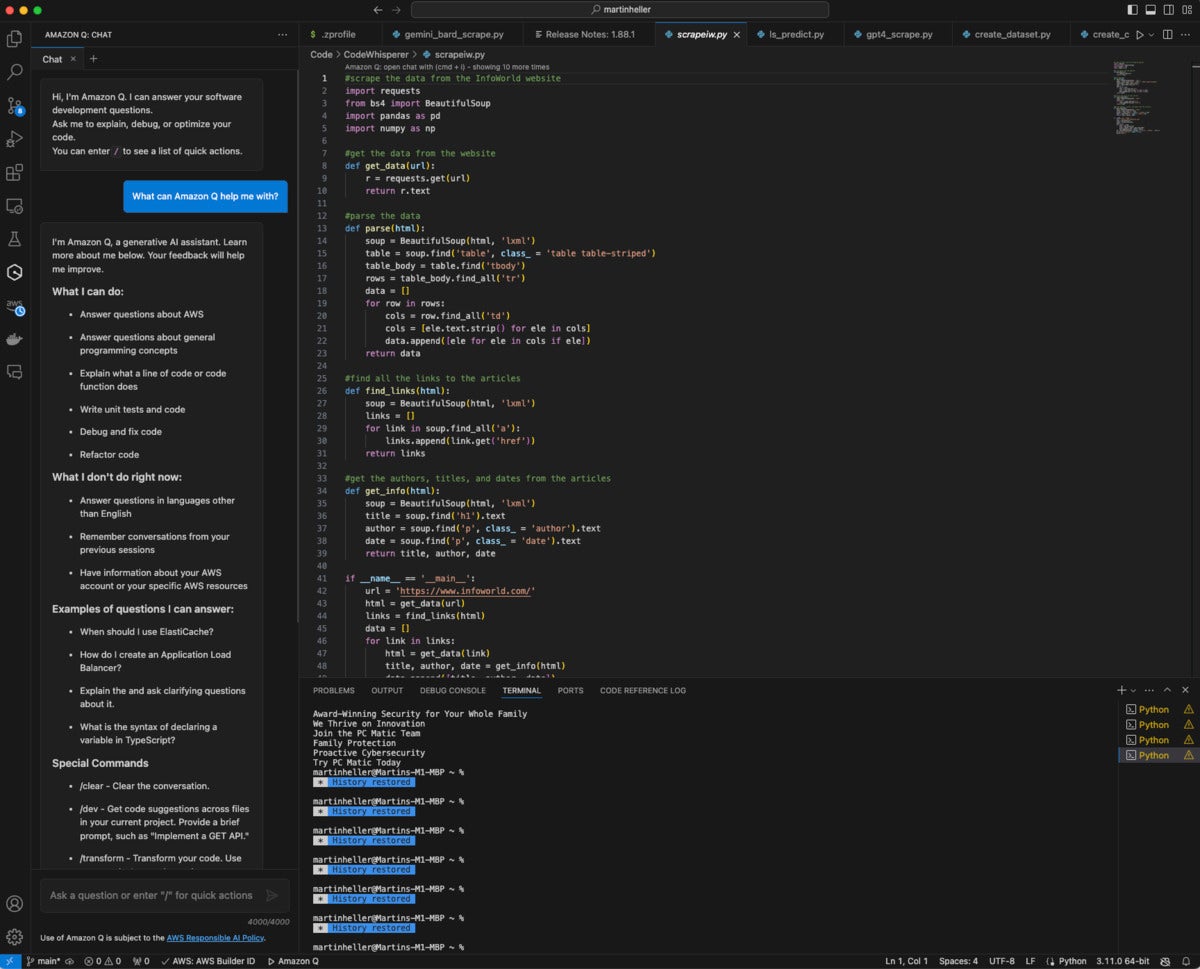

Amazon Q Developer in Visual Studio Code includes a chat window (at the left) as well as code generation. The chat window is showing Amazon Q Developer’s capabilities.

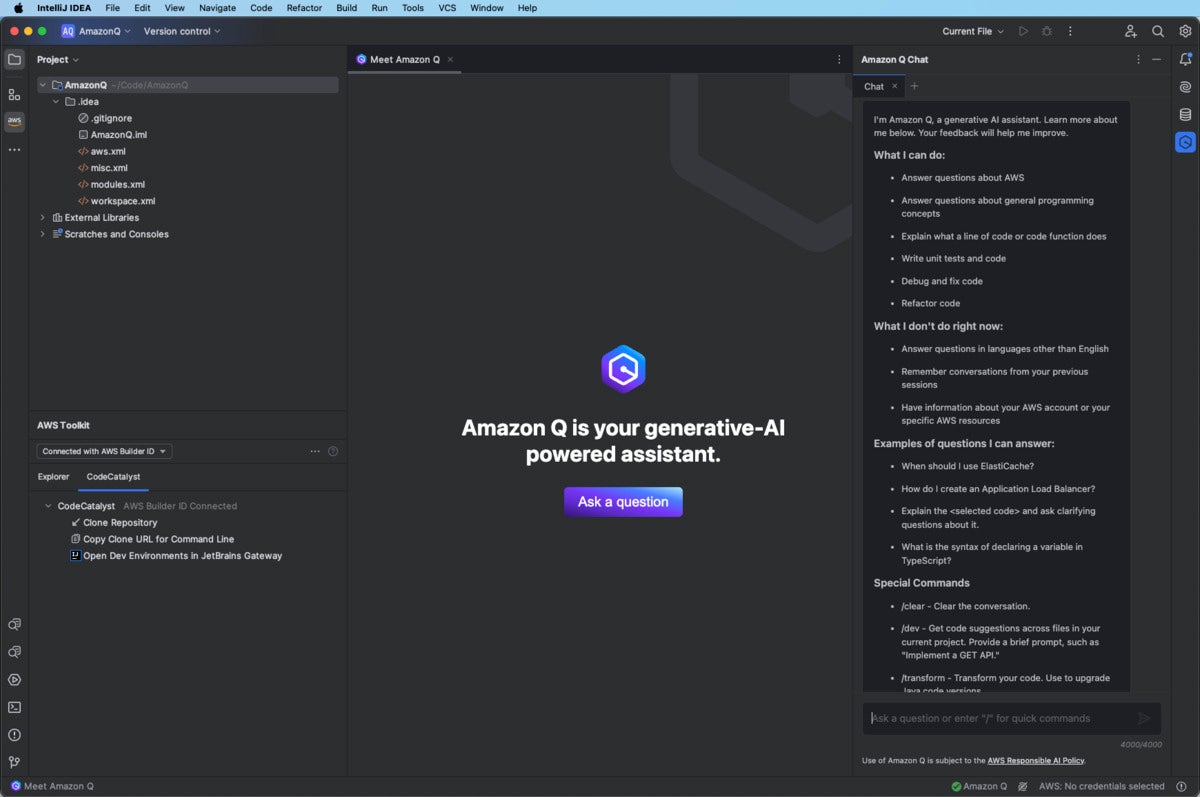

Like Visual Studio Code, JetBrains has a marketplace for IDE plugins, where Amazon Q Developer is available. You’ll need to reboot the IDE after downloading and installing the plugin. Then you’ll need to authenticate to AWS as discussed below. Note that the Amazon Q Developer plugin disables local inline JetBrains full-line code completion.

Amazon Q Developer in IntelliJ IDEA, and other JetBrains IDEs, has a chat window on the right as well as code completion. The chat window is showing Amazon Q Developer’s capabilities.

For Visual Studio, Amazon Q Developer is part of the AWS Toolkit, which you can find in the Visual Studio Marketplace. Again, once you’ve installed the toolkit you’ll need to authenticate to AWS as discussed below.

The authentication process is confusing because there are several options and several steps that bounce between your IDE and web browser. You used to have to repeat this process frequently, but the product manager assures me that re-authentication should now only be necessary every three months.

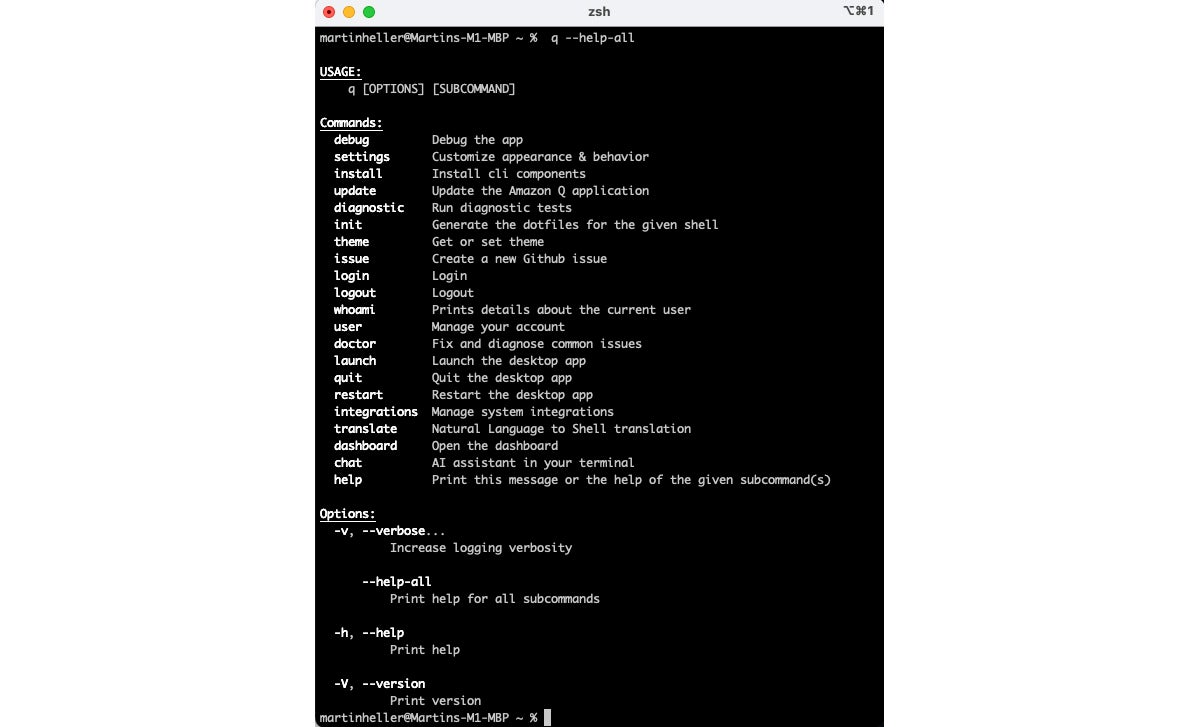

Amazon Q Developer for the command line is currently for macOS only, although a Linux version is on the roadmap and documented as a remote target. The macOS installation is basically a download of a DMG file, followed by running the disk image, dragging the Q file to the applications directory, and running that Q app to install the CLI q program and a menu bar icon that can bring up settings and the web user guide. You’ll also need to authenticate to AWS, which will log you in.

On macOS, the command-line program q supports multiple shell programs and multiple terminal programs. Here I’m using iTerm2 and the z shell. The q translate command constructs shell commands for you, and the q chat command opens an AI assistant.

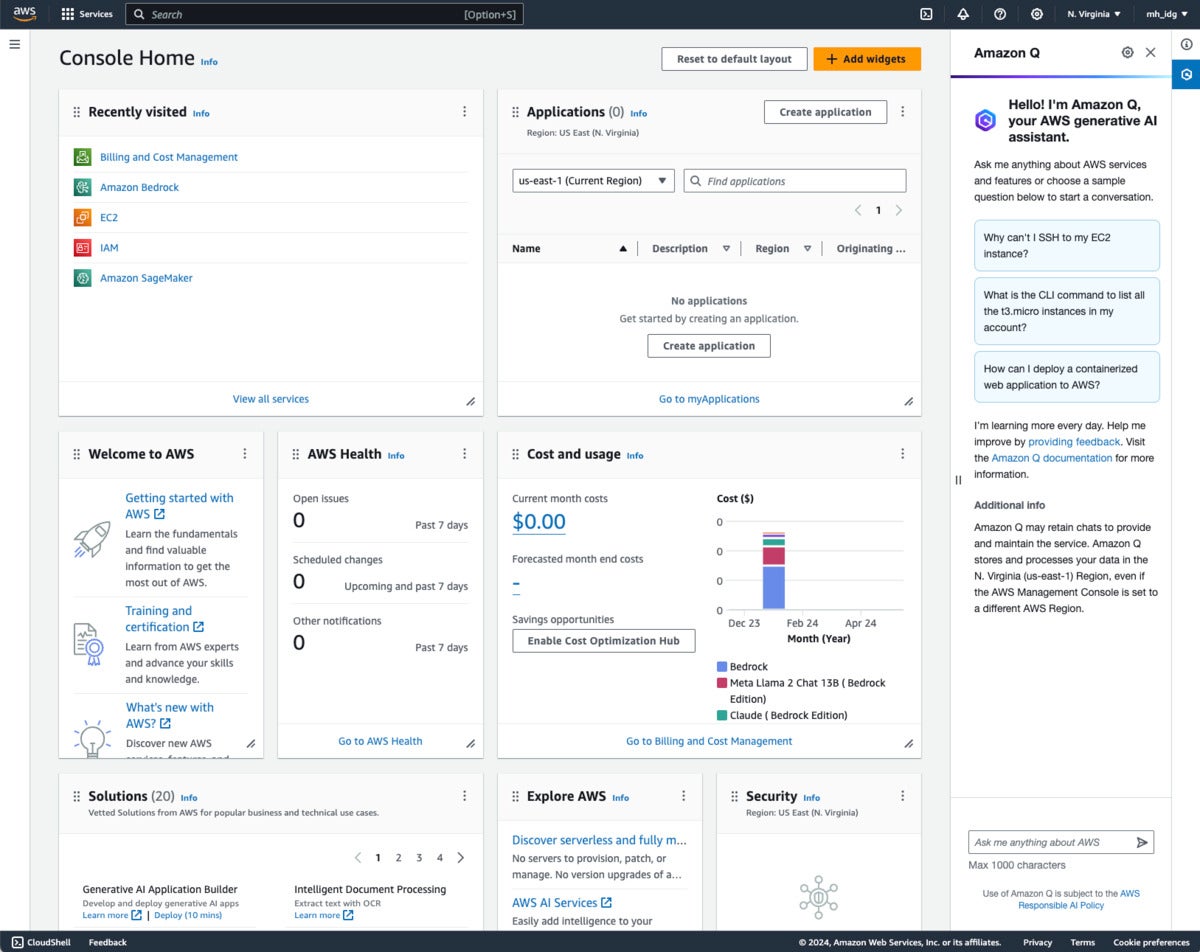

If you are running as an IAM user rather than a root user, you’ll have to add IAM permissions to use Amazon Q Developer. Once you have permission, AWS should display an icon at the right of the screen that brings up the Amazon Q Developer interface.

The Amazon Q Developer window at the right, running in the AWS Console, can chat with you about using AWS and can generate architectures and code for AWS applications.

According to AWS, “Amazon Q Developer Agent achieved the highest scores of 13.4% on the SWE-Bench Leaderboard and 20.5% on the SWE-Bench Leaderboard (Lite), a data set that benchmarks coding capabilities. Amazon Q security scanning capabilities outperform all publicly benchmarkable tools on detection across the most popular programming languages.”

Both of the quoted numbers are reflected on the SWE-Bench site, but there are two issues. Neither number has as yet been verified by SWE-Bench, and the Amazon Q Developer ranking on the Lite Leaderboard has dropped to #3. In addition, if there’s a supporting document on the web for Amazon’s security scanning claim, it has evaded my searches.

SWE-Bench, from Princeton, is “an evaluation framework consisting of 2,294 software engineering problems drawn from real GitHub issues and corresponding pull requests across 12 popular Python repositories.” The scores reflect the solution rates. The Lite data set is a subset of 300 GitHub issues.

Let’s explore how Amazon Q Developer behaves on the various tasks it supports in some of the 15 programming languages it supports. This is not a formal benchmark, but rather an attempt to get a feel for how well it works. Bear in mind that Amazon Q Developer is context sensitive and tries to use the persona that it thinks best fits the environment where you ask it for help.

I tried a softball question for predictive code generation and used one of Amazon’s inline suggestion examples. The Python prompt supplied was # Function to upload a file to an S3 bucket. Pressing Option-C as instructed got me the code below the prompt in the screenshot below, after an illegal character that I had to delete. I had to type import at the top to prompt Amazon Q to generate the imports for logging, boto3, and ClientError.

I also used Q Chat to tell me how to resolve the imports; it suggested a pip command, but on my system that fixed the wrong Python environment (v 3.11). I had to do a little sleuthing in the Frameworks directory tree to remind myself to use pip3 to target my current Python v 3.12 environment. I felt like singing “Daisy, Daisy” to Dave and complaining that my mind was going.

Inline code generation and chat with Amazon Q Developer. All the code below the # TODO comment was generated by Amazon Q Developer, although it took multiple steps.

I also tried Amazon’s two other built-in inline suggestion examples. The example to complete an array of fake users in Python mostly worked; I had to add the closing ] myself. The example to generate unit tests failed when I pressed Option-C: It generated illegal characters instead of function calls. (I’m starting to suspect an issue with Option-C in VS Code on macOS. It may or may not have anything to do with Amazon Q Developer.)

When I restarted VS Code, tried again, and this time pressed Return on the line below the comment, it worked fine, generating the test_sum function below.

    # Write a test case for the above function.
    def test_sum():
        """
        Unit test for the sum function.
        """
        assert sum(1, 2) == 3
        assert sum(-1, 2) == 1
        assert sum(0, 0) == 0
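The function under test is not reproduced in the text. As a minimal sketch, assuming it was a two-argument sum function like the "add two numbers" examples in AWS's documentation, the generated test can be run as follows. The body of sum here is a guess, and it shadows Python's built-in sum.

```python
def sum(a, b):
    """Hypothetical function under test; note that it shadows the built-in sum."""
    return a + b


# Write a test case for the above function.
def test_sum():
    """
    Unit test for the sum function.
    """
    assert sum(1, 2) == 3
    assert sum(-1, 2) == 1
    assert sum(0, 0) == 0


if __name__ == "__main__":
    test_sum()
    print("test_sum passed")
```

Without a two-argument definition in scope, the same test would fail against the built-in sum, which expects an iterable.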

AWS shows examples of completion with Amazon Q Developer in up to half a dozen programming languages in its documentation. The examples, like the Python ones we’ve discussed, are either very simple, e.g. add two numbers, or relate to common AWS operations supported by APIs, such as uploading files to an S3 bucket.

Since I now believed that Amazon Q Developer can generate Python, especially for its own test examples, I tried something a little different. As shown in the screenshot below, I created a file called quicksort.cpp, then typed an initial comment:

//function to sort a vector of generics in memory using the quicksort algorithm

Amazon Q Developer kept trying to autocomplete this comment, and in some cases the implementation as well, for different problems. Nevertheless it was easy to keep typing my specification while Amazon Q Developer erased what it had generated, and Amazon Q Developer eventually generated a nearly correct implementation.

Quicksort is a well-known algorithm. Both the C and C++ libraries have implementations of it, but they don’t use generics. Instead, you need to write type-specific comparison functions to pass to qsort. That’s historic, as the libraries were implemented before generics were added to the languages.

I eventually got Amazon Q Developer to generate the main routine to test the implementation. It initially generated documentation for the function instead, but when I rejected that and tried again it generated the main function with a test case.

Unsurprisingly, the generated code didn’t even compile the first time. I saw that Amazon Q Developer had left out the required #include <iostream>, but I let VS Code correct that error without sending any code to Amazon Q Developer or entering the #include myself.

It still didn’t compile. The errors were in the recursive calls to sortVector(), which were written in a style that tried to be too clever. I highlighted and sent one of the error messages to Amazon Q Developer for a fix, and it solved a different problem. I tried again, giving Amazon Q Developer more context and asking for a fix; this time it recognized the actual problem and generated correct code.

This experience was a lot like pair programming with an intern or a junior developer who hadn’t learned much C++. An experienced C/C++ programmer might have asked to recast the problem to use the qsort library function, on grounds of using the language library. I would have justified my specification to use generics on stylistic grounds as well as possible runtime efficiency grounds.

Another consideration here is that there’s a well-known worst case for quicksort, which takes a maximum time to run when the vector to be sorted is already in order. For this implementation, there’s a simple fix to be made by randomizing the partition point (see Knuth, The Art of Computer Programming, Volume 3: Sorting and Searching). If you use the library function you just have to live with the inefficiency.
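The randomized partition point is easy to sketch. The example below is in Python rather than the C++ used in the review, it is not the code Amazon Q Developer generated, and it builds new lists instead of sorting in place, all for the sake of brevity. The function name and the rng parameter are illustrative choices.

```python
import random


def quicksort(items, rng=random):
    """Return a new sorted list, using quicksort with a randomly chosen pivot.

    Picking the pivot at random means already-sorted input is no longer a
    guaranteed worst case. Works for any mutually comparable elements.
    """
    if len(items) <= 1:
        return list(items)
    pivot = items[rng.randrange(len(items))]
    smaller = [x for x in items if x < pivot]
    equal = [x for x in items if x == pivot]
    larger = [x for x in items if x > pivot]
    return quicksort(smaller, rng) + equal + quicksort(larger, rng)


if __name__ == "__main__":
    print(quicksort([5, 3, 8, 1, 9, 2]))
    print(quicksort(["pear", "apple", "fig"]))
```

Because Python lists are untyped, the same function handles integers, strings, or any other comparable values, which plays the role that generics play in the C++ version.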

Amazon Q Developer code generation from natural language to C++. I asked for a well-known sorting algorithm, quicksort, and complicated the problem slightly by specifying that the function operate on a vector of generics. It took several fixes, but got there eventually.

So far, none of my experiments with Amazon Q Developer have generated code references, which are associated with recommendations that are similar to training data. I do see a code reference log in Visual Studio Code, but it currently just says “Don’t want suggestions that include code with references? Uncheck this option in Amazon Q: Settings.”

By default, Q Developer scans your open code files for vulnerabilities in the background, and generates squiggly underlines when it finds them. From there you can bring up explanations of the vulnerabilities and often invoke automatic fixes for them. You can also ask Q to scan your whole project for vulnerabilities and generate a report. Scans look for security issues such as resource leaks, SQL injection, and cross-site scripting; secrets such as hardcoded passwords, database connection strings, and usernames; misconfiguration, compliance, and security issues in infrastructure as code files; and deviations from quality and efficiency best practices.

You’ve already seen how you can use Q Chat in an IDE to explain and fix code. It can also optimize code and write unit tests. You can go back to the first screenshot in this review to see Q Chat’s summary of what it can and can’t do, or use the /help command yourself once you have Q Chat set up in your IDE. On the whole, having Q Chat in Amazon Q Developer improves the product considerably over last year’s CodeWhisperer.

If you set up Amazon Q Developer at the Pro level, you can customize its code generation of Python, Java, JavaScript, and TypeScript by giving it access to your code base. The code base can be in an S3 bucket or in a repository on GitHub, GitLab, or Bitbucket.

Running a customization generates a fine-tuned model that your users can choose to use for their code suggestions. They’ll still be able to use the default base model, but companies have reported that using customized code generation increases developer productivity even more than using the base model.

Developer agents are long-running Amazon Q Developer processes. The one agent I’ve seen so far is for code transformation, specifically transforming Java 8 or Java 11 Maven projects to Java 17. There are a bunch of specific requirements your Java project needs to meet for a successful transformation, but the transformation agent worked well in AWS’s internal tests. While I have seen it demonstrated, I haven’t run it myself.

Amazon Q Developer for the CLI currently (v 1.2.0) works in macOS; supports the bash, zsh, and fish shells; runs in the iTerm2, macOS Terminal, Hyper, Alacritty, Kitty, and wezTerm terminal emulators; runs in the VS Code terminal and JetBrains terminals (except Fleet); and supports some 500 of the most popular CLIs such as git, aws, docker, npm, and yarn. You can extend the CLI to remote macOS systems with q integrations install ssh. You can also extend it to 64-bit versions of recent distributions of Fedora, Ubuntu, and Amazon Linux 2023. (That one’s not simple, but it’s documented.)

Amazon Q Developer CLI performs three major services. It can autocomplete your commands as you type, it can translate natural language specifications to CLI commands (q translate), and it can chat with you about how to perform tasks from the command line (q chat).

For example, I often have trouble remembering all the steps it takes to rebase a Git repository, which is something you might want to do if you and a colleague are working on the same code (careful!) on different branches (whew!). I asked q chat, “How can I rebase a git repo?”

It gave me the response in the first screenshot below. To get brushed up on how the action works, I asked the follow-up question, “What does rebasing really mean?” It gave me the response in the second screenshot below. Finally, to clarify the reasons why I would rebase my feature branch versus merging it with an updated branch, I asked, “Why rebase a repo instead of merging branches?” It gave me the response in the third screenshot below.

The simple answer to the question I meant to ask is item 2, which talks about the common case where the main branch is changing while you work on a feature. The real, overarching answer is at the end: “The decision to rebase or merge often comes down to personal preference and the specific needs of your project and team. It’s a good idea to discuss your team’s Git workflow and agree on when to use each approach.”

In the first screenshot above, I asked q chat, “How can I rebase a git repo?” In the second screenshot, I asked “What does rebasing really mean?” In the third, I asked “Why rebase a repo instead of merging branches?”

As you saw earlier in this review, a small Q icon at the upper right of the AWS Management Console window brings up a right-hand column where Amazon Q Developer invites you to “Ask me anything about AWS.” Similarly, a large Q icon at the bottom right of an AWS documentation page brings up that same AMAaA column as a modeless floating window.

Overall, I like Amazon Q Developer. It seems to be able to handle the use cases for which it was trained, and generate whole functions in common programming languages with only a few fixes. It can be useful for completing lines of code, doc strings, and if/for/while/try code blocks as you type. It’s also nice that it scans for vulnerabilities and can help you fix code problems.

On the other hand, Q Developer can’t generate full functions for some use cases; it then reverts to line-by-line suggestions. Also, there seems to be a bug associated with the use of Option-C to trigger code generation. I hope that will be fixed fairly soon, but the workaround is to press Return a lot.

According to Amazon, a 33% acceptance rate is par for the course for AI code generators. By acceptance rate, they mean the percentage of generated code that is used by the programmer. They claim a higher rate than that, even for their base model without customization. They also claim over 50% boosts in programmer productivity, although how they measure programmer productivity isn’t clear to me.

Their claim is that customizing the Amazon Q Developer model to “the way we do things here” from the company’s code base offers an additional boost in acceptance rate and programmer productivity. Note that code bases need to be cleaned up before using them for training. You don’t want the model learning bad, obsolete, or unsafe coding habits.

I can believe a hefty productivity boost for experienced developers from using Amazon Q Developer. However, I can’t in good conscience recommend that programming novices use any AI code generator until they have developed their own internal sense for how code should be written, validated, and tested. One of the ways that LLMs go off the rails is to start generating BS, also called hallucinating. If you can’t spot that, you shouldn’t rely on their output.

How does Amazon Q Developer compare to GitHub Copilot, JetBrains AI, and Tabnine? Stay tuned. I need to reexamine GitHub Copilot, which seems to get updates on a monthly basis, and take a good look at JetBrains AI and Tabnine before I can do that comparison properly. I’d bet good money, however, that they’ll all have changed in some significant way by the time I get through my full round of reviews.

—

Cost: Free with limited monthly access to advanced features; Pro tier $19/month.

Platform: Amazon Web Services. Supports Visual Studio Code, Visual Studio, JetBrains IDEs, the Amazon Console, and the macOS command line. Supports recent 64-bit Fedora, Ubuntu, and Amazon Linux 2023 as remote targets from macOS ssh.


Posted by Richy George on 17 June, 2024

“Turn your enterprise data into production-ready LLM applications,” blares the LlamaIndex home page in 60-point type. OK, then. The subhead for that is “LlamaIndex is the leading data framework for building LLM applications.” I’m not so sure that it’s the leading data framework, but I’d certainly agree that it’s a leading data framework for building with large language models, along with LangChain and Semantic Kernel, about which more later.

LlamaIndex currently offers two open source frameworks and a cloud. One framework is in Python; the other is in TypeScript. LlamaCloud (currently in private preview) offers storage, retrieval, links to data sources via LlamaHub, and a paid proprietary parsing service for complex documents, LlamaParse, which is also available as a stand-alone service.

LlamaIndex boasts strengths in loading data, storing and indexing your data, querying by orchestrating LLM workflows, and evaluating the performance of your LLM application. LlamaIndex integrates with over 40 vector stores, over 40 LLMs, and over 160 data sources. The LlamaIndex Python repository has over 30K stars.

Typical LlamaIndex applications perform Q&A, structured extraction, chat, or semantic search, and/or serve as agents. They may use retrieval-augmented generation (RAG) to ground LLMs with specific sources, often sources that weren’t included in the models’ original training.

LlamaIndex competes with LangChain, Semantic Kernel, and Haystack. Not all of these have exactly the same scope and capabilities, but as far as popularity goes, LangChain’s Python repository has over 80K stars, almost three times that of LlamaIndex (over 30K stars), while the much newer Semantic Kernel has over 18K stars, a little over half that of LlamaIndex, and Haystack’s repo has over 13K stars.

Repository age is relevant because stars accumulate over time; that’s also why I qualify the numbers with “over.” Stars on GitHub repos are loosely correlated with historical popularity.

LlamaIndex, LangChain, and Haystack all boast a number of major companies as users, some of whom use more than one of these frameworks. Semantic Kernel is from Microsoft, which doesn’t usually bother publicizing its users except for case studies.

The LlamaIndex framework helps you to connect data, embeddings, LLMs, vector databases, and evaluations into applications. These are used for Q&A, structured extraction, chat, semantic search, and agents.

At a high level, LlamaIndex is designed to help you build context-augmented LLM applications, which basically means that you combine your own data with a large language model. Examples of context-augmented LLM applications include question-answering chatbots, document understanding and extraction, and autonomous agents.

The tools that LlamaIndex provides perform data loading, data indexing and storage, querying your data with LLMs, and evaluating the performance of your LLM applications.

LLMs have been trained on large bodies of text, but not necessarily text about your domain. There are three major ways to perform context augmentation and add information about your domain: supplying documents, doing RAG, and fine-tuning the model.

The simplest context augmentation method is to supply documents to the model along with your query, and for that you might not need LlamaIndex. Supplying documents works fine unless the total size of the documents is larger than the context window of the model you’re using, which was a common issue until recently. Now there are LLMs with million-token context windows, which allow you to avoid going on to the next steps for many tasks. If you plan to perform many queries against a million-token corpus, you’ll want to cache the documents, but that’s a subject for another time.

Retrieval-augmented generation combines context with LLMs at inference time, typically with a vector database. RAG procedures often use embedding to limit the length and improve the relevance of the retrieved context, which both gets around context window limits and increases the probability that the model will see the information it needs to answer your question.

Essentially, an embedding function takes a word or phrase and maps it to a vector of floating point numbers; these are typically stored in a database that supports a vector search index. The retrieval step then uses a semantic similarity search, often using the cosine of the angle between the query’s embedding and the stored vectors, to find “nearby” information to use in the augmented prompt.
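As a rough illustration of the retrieval step described above, the following JavaScript sketch ranks stored vectors by cosine similarity to a query vector. The three-dimensional vectors and the names cosineSimilarity, topK, and store are made up for illustration. A real embedding model produces vectors with hundreds or thousands of dimensions, and a vector database would typically use an index rather than this linear scan.

```javascript
// Cosine of the angle between two equal-length numeric vectors.
function cosineSimilarity(a, b) {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the k stored items most similar to the query embedding.
function topK(queryVector, items, k) {
  return items
    .map((item) => ({ ...item, score: cosineSimilarity(queryVector, item.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}

// Toy "vector store": hand-written vectors standing in for real embeddings.
const store = [
  { text: "Invoices are due in 30 days.", vector: [0.9, 0.1, 0.0] },
  { text: "The office is closed on Sundays.", vector: [0.0, 0.2, 0.9] },
  { text: "Late invoices incur a 2% fee.", vector: [0.8, 0.3, 0.1] },
];

// Hypothetical embedding of a question about invoices.
const nearest = topK([1.0, 0.2, 0.0], store, 2);
console.log(nearest.map((item) => item.text));
```

The texts of the top-ranked items are what would be pasted into the augmented prompt ahead of the user's question.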

Fine-tuning LLMs is a supervised learning process that involves adjusting the model’s parameters to a specific task. It’s done by training the model on a smaller, task-specific or domain-specific data set that’s labeled with examples relevant to the target task. Fine-tuning often takes hours or days using many server-level GPUs and requires hundreds or thousands of tagged exemplars.

You can install the Python version of LlamaIndex three ways: from the source code in the GitHub repository, using the llama-index starter install, or using llama-index-core plus selected integrations. The starter installation would look like this:

pip install llama-index

This pulls in OpenAI LLMs and embeddings in addition to the LlamaIndex core. You’ll need to supply your OpenAI API key before you can run examples that use it. The LlamaIndex starter example is quite straightforward, essentially five lines of code after a couple of simple setup steps. There are many more examples in the repo, with documentation.

Doing the custom installation might look something like this:

pip install llama-index-core llama-index-readers-file llama-index-llms-ollama llama-index-embeddings-huggingface

That installs an interface to Ollama and Hugging Face embeddings. There’s a local starter example that goes with this installation. No matter which way you start, you can always add more interface modules with pip.

If you prefer to write your code in JavaScript or TypeScript, use LlamaIndex.TS. One advantage of the TypeScript version is that you can run the examples online on StackBlitz without any local setup. You’ll still need to supply an OpenAI API key.

LlamaCloud is a cloud service that allows you to upload, parse, and index documents and search them using LlamaIndex. It’s in a private alpha stage, and I was unable to get access to it. LlamaParse is a component of LlamaCloud that allows you to parse PDFs into structured data. It’s available via a REST API, a Python package, and a web UI. It is currently in a public beta. You can sign up to use LlamaParse for a small usage-based fee after the first 7K pages a week. The example given comparing LlamaParse and PyPDF for the Apple 10K filing is impressive, but I didn’t test this myself.

LlamaHub gives you access to a large collection of integrations for LlamaIndex. These include agents, callbacks, data loaders, embeddings, and about 17 other categories. In general, the integrations are in the LlamaIndex repository, PyPI, and NPM, and can be loaded with pip install or npm install.

create-llama is a command-line tool that generates LlamaIndex applications. It’s a fast way to get started with LlamaIndex. The generated application has a Next.js powered front end and a choice of three back ends.

RAG CLI is a command-line tool for chatting with an LLM about files you have saved locally on your computer. This is only one of many use cases for LlamaIndex, but it’s quite common.

The LlamaIndex Component Guides give you specific help for the various parts of LlamaIndex. The first screenshot below shows the component guide menu. The second shows the component guide for prompts, scrolled to a section about customizing prompts.

The LlamaIndex component guides document the different pieces that make up the framework. There are quite a few components.

We’re looking at the usage patterns for prompts. This particular example shows how to customize a Q&A prompt to answer in the style of a Shakespeare play. This is a zero-shot prompt, since it doesn’t provide any exemplars.

Once you’ve read, understood, and run the starter example in your preferred programming language (Python or TypeScript), I suggest that you read, understand, and try as many of the other examples as look interesting. The screenshot below shows the result of generating a file called essay by running essay.ts and then asking questions about it using chatEngine.ts. This is an example of using RAG for Q&A.

The chatEngine.ts program uses the ContextChatEngine, Document, Settings, and VectorStoreIndex components of LlamaIndex. When I looked at the source code, I saw that it relied on the OpenAI gpt-3.5-turbo-16k model; that may change over time. The VectorStoreIndex module seemed to be using the open-source, Rust-based Qdrant vector database, if I was reading the documentation correctly.

After setting up the terminal environment with my OpenAI key, I ran essay.ts to generate an essay file and chatEngine.ts to field queries about the essay.

As you’ve seen, LlamaIndex is fairly easy to use to create LLM applications. I was able to test it against OpenAI LLMs and a file data source for a RAG Q&A application with no issues. As a reminder, LlamaIndex integrates with over 40 vector stores, over 40 LLMs, and over 160 data sources; it works for several use cases, including Q&A, structured extraction, chat, semantic search, and agents.

I’d suggest evaluating LlamaIndex along with LangChain, Semantic Kernel, and Haystack. It’s likely that one or more of them will meet your needs. I can’t recommend one over the others in a general way, as different applications have different requirements.

Cost: Open source: free. LlamaParse import service: 7K pages per week free, then $3 per 1000 pages.

Platform: Python and TypeScript, plus cloud SaaS (currently in private preview).


Posted by Richy George on 13 June, 2024

According to research conducted by EDB, 35% of enterprise leaders will consider Postgres for their next project. Of this group, the great majority believe that AI is going mainstream in their organization. In addition, for the first time ever, analytical workloads have begun to surpass transactional workloads.

Enterprises see the potential of Postgres to fundamentally transform the way they use and manage data, and they see AI as a huge opportunity and advantage. But the diverse data teams within these organizations face increasing fragmentation and complexity when it comes to their data. To operationalize data for AI apps, they demand better observability and control across the data estate, not to mention a solution that works seamlessly across clouds.

It’s clear that Postgres has the right to play and deliver for the AI generation of apps, and EDB has taken recent strides to do just this with the release of EDB Postgres AI, an intelligent platform for transactional, analytical, and AI workloads.

The new platform product offers a unified approach to data management and is designed to streamline operations across hybrid cloud and multi-cloud environments, meeting enterprises wherever they are in their digital transformation journey.

EDB Postgres AI helps elevate data infrastructure to a strategic technology asset, by bringing analytical and AI systems closer to customers’ core operational and transactional data—all managed through the popular open source database, Postgres.

Let’s take a look at the key features and advantages of EDB Postgres AI.

Analysts and data scientists need to launch critical new projects, and they need access to up-to-the-second transactional and operational data within their core Postgres databases. Yet these teams are often forced to default to clunky ETL or ELT processes that result in latency, data inconsistency, and quality issues that hamper the efficient extraction of insights.

EDB Postgres AI introduces a simple platform for deploying new analytics and data science projects rapidly, without the need for operationally expensive data pipelines and multiple platforms. EDB Postgres AI’s Lakehouse capabilities allow for the rapid execution of analytical queries on transactional data without impacting performance, all using the same intuitive interface. By storing operational data in a columnar format, EDB Postgres AI boosts query speeds by up to 30x compared to standard Postgres and reduces storage costs, making real-time analytics more accessible.

Even if data teams have made Postgres their primary database, chances are their data estate is still sprawled across a diverse mix of fully-managed and self-managed Postgres deployments. Managing these systems becomes increasingly difficult and costly, particularly when it comes to ensuring uptime, security and compliance.

The new capabilities of the recent EDB release will help customers create and deliver value greater than the sum of all the data parts, no matter where it is. EDB Postgres AI provides comprehensive observability tools that offer a unified view of Postgres deployments across different environments. This means that users can monitor and tune their databases, with automatic suggestions on improving query performance, AI-driven event detection and log analysis, and smart alerting when metrics exceed configurable thresholds.

With the surge in AI advancements, EDB sees a significant opportunity to enhance data management for our customers through AI integration. The strategy of the new platform is twofold: integrate AI capabilities into Postgres, and simultaneously, optimize Postgres for AI workloads.

Firstly, this release includes an AI-driven migration copilot, which is trained on EDB documentation and knowledge bases and helps answer common questions about migration errors including command line and schema issues, with instant error resolution and guidance tailored to database needs.

In addition, EDB remains focused on optimizing Postgres for AI workloads through support for vector databases and AI workloads. With capabilities like the pgvector extension and EDB’s pgai extension, the platform enables the storage and querying of vector embeddings, crucial for AI applications. This support allows developers to build sophisticated AI models directly within the Postgres ecosystem.

The EDB Postgres AI platform streamlines capabilities by providing a single place for storing vector embeddings and doing similarity search with both pgai and pgvector, which simplifies the AI application pipeline for builders. The platform also enables users to leverage the mature data management features of PostgreSQL such as reliability with high availability, security with Transparent Data Encryption (TDE), and scalability with on-premises, hybrid, and cloud deployments.

EDB Postgres AI transforms unstructured data management with its new powerful “retriever” functionality that enables similarity search across vector data. The auto embedding feature automatically generates AI embeddings for data in Postgres tables, keeping them up-to-date via triggers. Coupled with the retriever’s ability to create embeddings for Amazon S3 data on demand, pgai provides a seamless solution to making unstructured sources searchable by similarity. Users can also leverage a broad list of state-of-the-art encoder models like Hugging Face and OpenAI. With just pgai.create_retriever() and pgai.retrieve(), developers gain vector similarity capabilities within their trusted Postgres database.

This dual approach ensures that Postgres becomes a comprehensive solution for both traditional and AI-driven data management needs.

EDB Postgres AI maintains the critical, enterprise-grade capabilities that EDB is known for. This includes the comprehensive Oracle Compatibility Mode, which helps customers break free from legacy systems while lowering TCO by up to 80% compared to legacy commercial databases. The product also supports EDB’s geo-distributed high-availability solutions, meaning customers can deploy multi-region clusters with five-nines availability to guarantee that data is consistent, timely, and complete—even during disruptions.

The release of EDB Postgres AI marks EDB’s 20th year as a leader of enterprise-grade Postgres and introduces the next evolution of the company—one even more proudly associated with Postgres. Why? Because we know that the flexibility and extensibility make Postgres uniquely positioned to solve for the most complex and critical data challenges. Learn more about how EDB can help you use EDB Postgres AI for your most demanding applications.

Aislinn Shea Wright is VP of product management at EDB.

—

New Tech Forum provides a venue for technology leaders—including vendors and other outside contributors—to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send all inquiries to doug_dineley@foundryco.com.


Posted by Richy George on 12 June, 2024

Oracle is connecting its cloud to Google’s to offer Google customers high-speed access to database services. The move comes just nine months after it struck a similar deal with Microsoft to offer its database services on Azure. Separately, Microsoft is extending its Azure platform into Oracle’s cloud to give OpenAI access to more computing capacity on which to train its models.

“What started as a simple interconnect is becoming a more defined multicloud strategy for Oracle. The announcement is the beginning of a new trend—cloud providers are willing to work together to serve the needs of shared customers,” said Dave McCarthy, Research Vice President at IDC.

The Oracle-Google partnership will see the companies create a series of points of interconnect enabling customers of one to access services in the other’s cloud. Customers will be able to deploy general-purpose workloads with no cross-cloud data transfer charges, the companies said.

The two clouds will initially interconnect in 11 regions: Sydney, Melbourne, São Paulo, Montreal, Frankfurt, Mumbai, Tokyo, Singapore, Madrid, London, and Ashburn.

Oracle also plans to collocate its database hardware and software in Google’s datacenters, initially in North America and Europe, making it possible for joint customers to deploy, manage, and use Oracle database instances on Google Cloud without having to retool applications.

The two companies will market that service under the catchy name of Oracle Database@Google Cloud. Oracle Exadata Database Service, Oracle Autonomous Database Service, and Oracle Real Application Clusters (RAC) will all launch later this year across four regions: US East, US West, UK South, and Germany Central, with more planned later.

Oracle Database@Google Cloud customers will have access to a unified support service, and will be able to make purchases via the Google Cloud Marketplace using their existing Google Cloud commitments and Oracle license benefits.

The partnership with Google Cloud can be seen as a continuation of Oracle’s multicloud strategy that it started executing with the Microsoft partnership, analysts said, adding that Oracle expects that the new offerings will help many of its customers fully migrate from on-premises infrastructure to the cloud.

By adopting a multicloud approach, Oracle avoids going head-to-head with “entrenched” cloud providers. Instead, McCarthy said, Oracle is leveraging its strengths in data management to solve problems that other cloud providers cannot.

Oracle may have been swayed by the experience of its partnership with Microsoft Azure, dbInsight’s chief analyst Tony Baer said. Although AWS may have been a more obvious target to partner with next due to its reach, Google Cloud was probably “more hungry” for a partnership, he said.

McCarthy expected AWS to soon start exploring a similar partnership with Oracle as the Azure and Google Cloud partnerships will put pressure on the hyperscaler.

“AWS faces the same challenges as the other clouds when it comes to Oracle workloads. I expect this increased competition from Azure and Google Cloud will force them to explore a similar route,” he said, adding that migrating Oracle workloads has always been tricky and cloud providers need to offer the combination of Oracle’s hardware and software to allow enterprises to unlock top notch performance across workloads.

Separately, Oracle is partnering with Microsoft to provide additional capacity for OpenAI by extending Microsoft’s Azure AI platform to Oracle Cloud Infrastructure (OCI).

“OCI will extend Azure’s platform and enable OpenAI to continue to scale,” said OpenAI CEO Sam Altman in a statement.

This partnership, according to independent semiconductor technology analyst Mike Demler, is all about increasing compute capacity.

“OpenAI runs on Microsoft’s Azure AI platform, and the models they’re creating continue to grow in size exponentially from one generation to the next,” Demler said.

While GPT-3 uses 175 billion parameters, the latest GPT-MoE (Mixture of Experts) is 10 times that large, with 1.8 trillion parameters, the independent analyst said, adding that the latter needs a lot more GPUs than Microsoft alone can supply in its cloud platform.


Posted by Richy George on 29 May, 2024

As in every other programming environment, you need a place to store your data when coding in the browser with JavaScript. Beyond simple JavaScript variables, there are a variety of options ranging in sophistication, from using localStorage to cookies to IndexedDB and the service worker cache API. This article is a quick survey of the common mechanisms for storing data in your JavaScript programs.

You are probably already familiar with JavaScript’s set of highly flexible variable types. We don’t need to review them here; they are very powerful and capable of modeling any kind of data from the simplest numbers to intricate cyclical graphs and collections.

The downside of using variables to store data is that they are confined to the life of the running program. When the program exits, the variables are destroyed. Of course, they may be destroyed before the program ends, but the longest-lived global variable will vanish with the program. In the case of the web browser and its JavaScript programs, even a single click of the refresh button annihilates the program state. This fact drives the need for data persistence; that is, data that outlives the life of the program itself.

An additional complication with browser JavaScript is that it’s a sandboxed environment. It doesn’t have direct access to the operating system because it isn’t installed. A JavaScript program relies on the agency of the browser APIs it runs within.

The other end of the spectrum from using built-in variables to store JavaScript data objects is sending the data off to a server. You can do this readily with a fetch() POST request. Provided everything works out on the network and the back-end API, you can trust that the data will be stored and made available in the future with another GET request.
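A minimal sketch of such a POST request follows. The saveToServer name and the /api/notes endpoint are hypothetical, and the back end is assumed to accept and return JSON. The fetch function is passed in as a parameter only so the sketch can be exercised without a network.

```javascript
// Send a JavaScript object to a server as JSON and return the parsed reply.
// `fetchImpl` defaults to the global fetch; passing it in makes the function easy to test.
async function saveToServer(url, data, fetchImpl = fetch) {
  const response = await fetchImpl(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(data),
  });
  if (!response.ok) {
    // fetch() only rejects on network failure, so HTTP error statuses are checked by hand
    throw new Error(`Save failed with status ${response.status}`);
  }
  return response.json();
}

// Usage (hypothetical endpoint):
// saveToServer("/api/notes", { title: "Groceries" }).then(console.log);
```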

So far, we’re choosing between the transience of variables and the permanence of server-side persistence. Each approach has a particular profile in terms of longevity and simplicity. But a few other options are worth exploring.

There are two types of built-in “web storage” in modern browsers: localStorage and sessionStorage. These give you convenient access to longer-lived data. They both give you a key-value store, and each has its own lifecycle that governs how data is handled:

localStorage saves a key-value pair that survives across page loads on the same domain.

sessionStorage operates similarly to localStorage, but the data only lasts as long as the page session.

In both cases, values are coerced to a string, meaning that a number will become a string version of itself and an object will become “[object Object]”. That’s obviously not what you want, but if you want to save an object, you can always use JSON.stringify() and JSON.parse().

Both localStorage and sessionStorage use getItem and setItem to set and retrieve values:

localStorage.setItem("foo", "bar");

localStorage.getItem("foo"); // returns "bar"

You can most clearly see the difference between the two by setting a value on them and then closing the browser tab, then reopening a tab on the same domain and checking for your value. Values saved using localStorage will still exist, whereas sessionStorage will be null. You can use the devtools console to run this experiment:

localStorage.setItem("foo", "bar");

sessionStorage.setItem("foo", "bar");

// close the tab, reopen it

localStorage.getItem("foo"); // returns "bar"

sessionStorage.getItem("foo"); // returns null
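Because web storage coerces values to strings, objects need a round trip through JSON.stringify() and JSON.parse(), as mentioned earlier. The helpers below are one possible way to wrap that; saveJSON and loadJSON are illustrative names, not browser APIs. They take the storage object as a parameter, so they work with either localStorage or sessionStorage.

```javascript
// Save any JSON-serializable value under a key in a Storage-like object.
function saveJSON(storage, key, value) {
  storage.setItem(key, JSON.stringify(value));
}

// Load a value back, returning `fallback` if the key is missing or unparseable.
function loadJSON(storage, key, fallback = null) {
  const raw = storage.getItem(key);
  if (raw === null) return fallback;
  try {
    return JSON.parse(raw);
  } catch {
    return fallback;
  }
}

// In the browser:
// saveJSON(localStorage, "prefs", { theme: "dark", fontSize: 14 });
// loadJSON(localStorage, "prefs").theme; // "dark"
```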

Whereas localStorage and sessionStorage are tied to the page and domain, cookies give you a longer-lived option tied to the browser itself. They also use key-value pairs. Cookies have been around for a long time and are used for a wide range of cases, including ones that are not always welcome. Cookies are useful for tracking values across domains and sessions. They have specific expiration times, but the user can choose to delete them anytime by clearing their browser history.

Cookies are attached to requests and responses with the server, and can be modified (with restrictions governed by rules) by both the client and the server. Handy libraries like JavaScript Cookie simplify dealing with cookies.

Cookies are a bit funky when used directly, which is a legacy of their ancient origins. They are set for the domain on the document.cookie property, in a format that includes the value, the expiration time (in RFC 5322 format), and the path. If no expiration is set, the cookie will vanish after the browser is closed. The path sets what path on the domain is valid for the cookie.

Here’s an example of setting a cookie value:

document.cookie = "foo=bar; expires=Wed, 18 Dec 2024 12:00:00 UTC; path=/";
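Rather than writing the expiration date by hand, you can let a Date object format it. The following is a small sketch; buildCookie is a made-up helper name, and it covers only the value, expiration, and path parts of the format described above.

```javascript
// Build a cookie string suitable for assigning to document.cookie.
function buildCookie(name, value, { expires, path = "/" } = {}) {
  let cookie = `${encodeURIComponent(name)}=${encodeURIComponent(value)}`;
  if (expires instanceof Date) {
    // toUTCString() produces the date format that cookies expect
    cookie += `; expires=${expires.toUTCString()}`;
  }
  cookie += `; path=${path}`;
  return cookie;
}

// Expire one week from now (in the browser):
// const oneWeek = new Date(Date.now() + 7 * 24 * 60 * 60 * 1000);
// document.cookie = buildCookie("foo", "bar", { expires: oneWeek });
```

Encoding the name and value with encodeURIComponent keeps characters such as semicolons and spaces from breaking the cookie string.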

And to recover the value:

function getCookie(cname) {
  const name = cname + "=";
  const decodedCookie = decodeURIComponent(document.cookie);
  const ca = decodedCookie.split(';');
  for (let i = 0; i < ca.length; i++) {
    let c = ca[i];
    while (c.charAt(0) === ' ') {
      c = c.substring(1);
    }
    if (c.indexOf(name) === 0) {
      return c.substring(name.length, c.length);
    }
  }
  return "";
}

const cookieValue = getCookie("foo");
console.log("Cookie value for 'foo':", cookieValue);

In the above, we use decodeURIComponent to unpack the cookie and then break it along its separator character, the semicolon (;), to access its component parts. To get the value we match on the name of the cookie plus the equals sign.

An important consideration with cookies is security, specifically cross-site scripting (XSS) and cross-site request forgery (CSRF) attacks. (Setting HttpOnly on a cookie makes it only accessible on the server, which increases security but eliminates the cookie’s utility on the browser.)

IndexedDB is the most elaborate and capable in-browser data store. It’s also the most complicated. IndexedDB uses asynchronous calls to manage operations. That’s good because it lets you avoid blocking the thread, but it also makes for a somewhat clunky developer experience.

IndexedDB is really a full-blown object-oriented database. It can handle large amounts of data, modeled essentially like JSON. It supports sophisticated querying, sorting, and filtering. It’s also available in service workers as a reliable persistence mechanism between thread restarts and between the main and worker threads.

When you create an object store in IndexedDB, it is associated with the domain and lasts until the user deletes it. It can be used as an offline datastore to handle offline functionality in progressive web apps, in the style of Google Docs.

To get a flavor of using IndexedDB, here’s how you might create a new store:

let db = null; // A handle for the DB instance

let request = indexedDB.open("MyDB", 1); // Try to open the "MyDB" instance (async operation)

// onupgradeneeded is the event indicating that MyDB is either new or its schema has changed
request.onupgradeneeded = function(event) {
  db = event.target.result; // set the DB handle to the result of the onupgradeneeded event
  // Check for the existence of myObjectStore. If it doesn't exist, create it
  if (!db.objectStoreNames.contains("myObjectStore")) {
    db.createObjectStore("myObjectStore", { autoIncrement: true }); // create myObjectStore
  }
};

// onsuccess fires when the database is successfully opened. It fires without
// onupgradeneeded firing if the DB and object store already exist. Here we save the db reference
request.onsuccess = function(event) { db = event.target.result; };

// If an error occurs, onerror will fire
request.onerror = function(event) { console.log("Error in db:", event.target.error); };

The above IndexedDB code is simple (it just opens or creates a database and object store), but it gives you a sense of IndexedDB’s asynchronous nature.
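One common way to tame the callback style is to wrap each request in a Promise. Below is a minimal sketch of that idea. The names requestToPromise, addRecord, and getRecord are hypothetical helpers, and the code assumes db is an already opened database containing the named object store:

```javascript
// Hypothetical helper: wrap an IDBRequest-style object in a Promise that
// resolves with request.result or rejects with request.error.
function requestToPromise(request) {
  return new Promise((resolve, reject) => {
    request.onsuccess = () => resolve(request.result);
    request.onerror = () => reject(request.error);
  });
}

// Add a value to an object store; resolves to the key of the new record.
// Assumes `db` is an open IDBDatabase (for example, the handle saved in onsuccess).
function addRecord(db, storeName, value) {
  const store = db.transaction(storeName, 'readwrite').objectStore(storeName);
  return requestToPromise(store.add(value));
}

// Read a value back by key; resolves to undefined if the key is not found.
function getRecord(db, storeName, key) {
  const store = db.transaction(storeName, 'readonly').objectStore(storeName);
  return requestToPromise(store.get(key));
}
```

With these in place, browser code could write and read a record with something like addRecord(db, "myObjectStore", { title: "demo" }).then((key) => getRecord(db, "myObjectStore", key)). Each helper opens its own transaction, which keeps the sketch simple at the cost of not grouping several operations atomically.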

Service workers include a specialized data storage mechanism called cache. Cache makes it easy to intercept requests, save responses, and modify them if necessary. It’s primarily designed to cache responses (as the name implies) for offline use or to optimize response times. This is something like a customizable proxy cache in the browser that works transparently from the viewpoint of the main thread.

Here’s a look at caching a response using a cache-first strategy, wherein you try to get the response from the cache first, then fall back to the network (saving the response to the cache):

self.addEventListener('fetch', (event) => {
  const request = event.request;
  const url = new URL(request.url);

  // Try serving assets from cache first
  event.respondWith(
    caches.match(request)
      .then((cachedResponse) => {
        // If found in cache, return the cached response
        if (cachedResponse) {
          return cachedResponse;
        }

        // If not in cache, fetch from network
        return fetch(request)
          .then((response) => {
            // Clone the response for potential caching
            const responseClone = response.clone();

            // Cache the new response for future requests
            caches.open('my-cache')
              .then((cache) => {
                cache.put(request, responseClone);
              });

            return response;
          });
      })
  );
});

This gives you a highly customizable approach because you have full access to the request and response objects.
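As one illustration of that flexibility, a service worker might inspect each request and response before deciding whether to store it. The sketch below uses a hypothetical shouldCache helper; the policy it encodes (GET only, successful, same-origin responses) is an assumption chosen for the example rather than a recommendation from the cache API itself:

```javascript
// Hypothetical policy helper: decide whether a request/response pair should
// be written to the cache. The specific rules are assumptions for this sketch.
function shouldCache(request, response) {
  // cache.put() rejects requests whose method is not GET
  if (request.method !== 'GET') return false;
  // Skip missing or failed responses (response.ok means status 200-299)
  if (!response || !response.ok) return false;
  // Only keep same-origin responses, which have type "basic"
  if (response.type !== 'basic') return false;
  return true;
}
```

Inside a fetch handler, the call to cache.put(request, responseClone) could then be guarded with if (shouldCache(request, response)).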

We’ve looked at the commonly used options for persisting data in the browser, each with a different profile. When deciding which one to use, a useful question to ask is: What is the simplest option that meets my needs? Another concern is security, especially with cookies.

Other interesting possibilities are emerging around the use of WebAssembly for persistent storage. Wasm’s ability to run natively on the device could give performance boosts. We’ll look at using Wasm for data persistence another day.


Copyright 2015 - InnovatePC - All Rights Reserved

Site Design By Digital web avenue
